Load Data from the MashZone NextGen Analytics In-Memory Stores

To load data from MashZone NextGen Analytics In-Memory Stores, datasets must first be stored using <storeto> in MashZone NextGen mashups or stored by external systems. See Store Data in MashZone NextGen Analytics In-Memory Stores for more information and examples.

The basics of using <loadfrom> to load a dataset stored in an In-Memory Store that MashZone NextGen Analytics created dynamically are covered in Group and Analyze Rows and Group and Analyze Rows with Row Detail in Getting Started.

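You can also load a dataset from a declared In-Memory Store, load datasets from a dynamic external In-Memory Store, load dataset rows for specific time periods, use pagination to fine-tune loading performance, and handle missing In-Memory Stores. Each of these tasks is covered in its own section of this topic.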

Load a Dataset from a Declared In-Memory Store

Loading data from a declared In-Memory Store is identical to loading data from a dynamic store except for the form of the store name. Declared store names are compound: they include both the data source name and the store name, separated by a period.

The following example loads data stored in a declared store named sample-cache.LegislatorsDeclCache. The data source name is sample-cache and the store name is LegislatorsDeclCache. See Store Data in Declared In-Memory Stores for an example of the mashup that stores data in this In-Memory Store.

<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xsi:schemaLocation='http://www.openmashup.org/schemas/v1.0/EMML/../schemas/EMMLSpec.xsd'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro='http://www.openmashup.org/schemas/v1.0/EMMLMacro'
    name='congressByStateGender'>
  <output name='result' type='document' />
  <variable name="congress" type="document"/>
  <loadfrom cache='sample-cache.LegislatorsDeclCache' variable='congress' />
  <raql outputvariable='result'>
    select firstname, lastname, state, party, gender from congress
    order by state, gender
  </raql>
</mashup>

Load Datasets from a Dynamic External In-Memory Store

When a dynamic In-Memory Store is created and populated by an external system, there is no configuration file to provide connection information or to describe the data in the dataset. Instead, MashZone NextGen administrators must add configuration for external dynamic stores so that MashZone NextGen Analytics can connect to the store and RAQL can work with the dataset.

Once a connection has been made, you can load data from dynamic external stores just like any store, except for the form of the store name. Store names for dynamic external stores are compound names including both the connection name and the store name (the cache name).

Important: In addition to the in-memory store, the Terracotta Management Console (TMC) must also be running to successfully connect to a dynamic external in-memory store.

The following example loads data stored in a dynamic external store named ApamaExternalBigMemory.SampleDynamicCache.

The connection name is ApamaExternalBigMemory. The store name assigned by the external system when it created the store is SampleDynamicCache.

<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro="http://www.openmashup.org/schemas/v1.0/EMMLMacro"
    name='SampleOfExternalCache'>
  <output name='result' type='document'/>
  <variable name="simpleData" type="document" stream="true"/>
  <loadfrom cache="ApamaExternalBigMemory.SampleDynamicCache"
      variable="$simpleData" />
  <raql outputvariable="result">
    select * from simpleData
  </raql>
</mashup>

Load Dataset Rows for Specific Time Periods

In many cases, only the most recent updates to a dataset matter for an analysis. Using the period attribute in the <loadfrom> statement, you can load only those rows of a dataset stream stored in an In-Memory Store that fall within a time interval counted back from now.

You specify the recent time period for the rows you want to load as a number of seconds, minutes, hours, days or weeks. See <loadfrom> for the syntax to use.

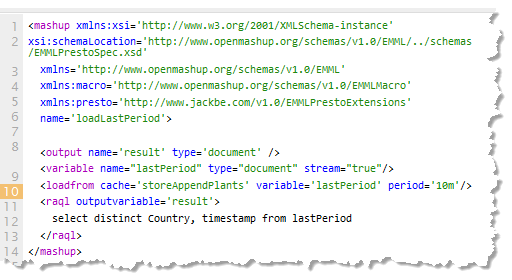

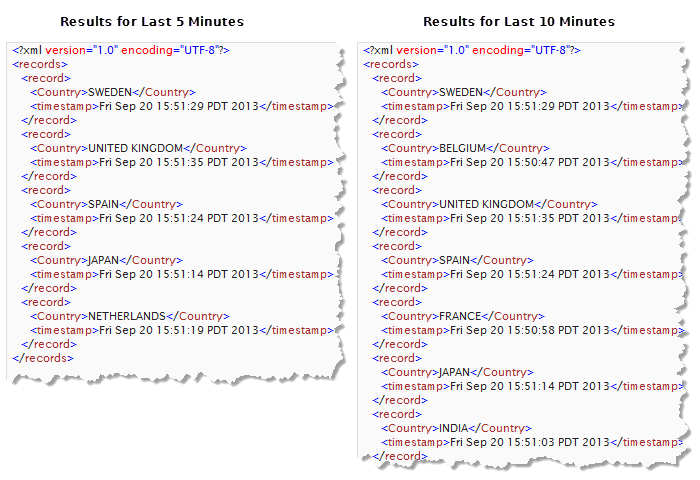

The following example works in conjunction with the example shown in

Append Query Results Repeatedly to illustrate the effect of retrieving dataset rows based on a recent time period:

It retrieves all rows from the storeAppendPlants in-memory store that were added within the last period of time set in the period attribute and then selects the list of distinct countries within those rows and the timestamp. The following examples of results for different time periods shows which rows were loaded:

To try this example, use the following EMML code for the loadLastPeriod mashup and open it in Mashup Editor. Also create or open the storeAppend mashup shown in Append Query Results Repeatedly. Then run storeAppend. Wait a few seconds or minutes, update the value of period in loadLastPeriod and run it to see which rows it loads.

<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xsi:schemaLocation='http://www.openmashup.org/schemas/v1.0/EMML/../schemas/EMMLSpec.xsd'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro='http://www.openmashup.org/schemas/v1.0/EMMLMacro'
    name='loadLastPeriod'>
  <output name='result' type='document' />
  <variable name="lastPeriod" type="document" stream="true"/>
  <loadfrom cache='storeAppendPlants' variable='lastPeriod' period='5m' />
  <raql outputvariable='result'>
    select distinct Country, timestamp from lastPeriod
  </raql>
</mashup>
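As a further illustration, the same period-limited load can feed an aggregate query. The following sketch counts the rows loaded per country. It reuses the storeAppendPlants store, the Country column and the 5m period from the loadLastPeriod example. The mashup name countLastPeriod and the rowsLoaded alias are hypothetical, and the query assumes that RAQL accepts the SQL-style count(*) aggregate with group by.

```xml
<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xsi:schemaLocation='http://www.openmashup.org/schemas/v1.0/EMML/../schemas/EMMLSpec.xsd'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro='http://www.openmashup.org/schemas/v1.0/EMMLMacro'
    name='countLastPeriod'>
  <!-- countLastPeriod is a hypothetical name used only for this sketch -->
  <output name='result' type='document' />
  <variable name="lastPeriod" type="document" stream="true"/>
  <!-- load only the rows added to the store within the last 5 minutes -->
  <loadfrom cache='storeAppendPlants' variable='lastPeriod' period='5m' />
  <!-- assumption: RAQL supports count(*) with group by -->
  <raql outputvariable='result'>
    select Country, count(*) as rowsLoaded from lastPeriod
    group by Country
  </raql>
</mashup>
```

Because only rows added within the period are loaded, the counts should shrink as you shorten the period and grow as you lengthen it.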

Use Pagination to Fine Tune Loading Performance

Datasets are streamed using a default fetch size that defines how many rows are loaded at a time. Aggregate calculations are not completed until the entire dataset is loaded.

You can fine-tune the page size with the fetchsize attribute. This can improve performance during loading. For example:

<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xsi:schemaLocation='http://www.openmashup.org/schemas/v1.0/EMML/../schemas/EMMLSpec.xsd'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro='http://www.openmashup.org/schemas/v1.0/EMMLMacro'
    name='congressByStateGender'>
  <output name='result' type='document' />
  <variable name="congress" type="document"/>
  <loadfrom cache='sample-cache.LegislatorsDeclCache'
      fetchsize='10000' variable='congress' />
  <raql outputvariable='result'>
    select firstname, lastname, state, party, gender from congress
    order by state, gender
  </raql>
</mashup>
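The point about aggregate calculations can be seen with a grouped query. The following sketch loads the same declared store with a smaller page size and counts members per state and gender. The fetchsize value of 500, the mashup name congressCountByStateGender and the members alias are arbitrary choices for illustration, and the query assumes that RAQL accepts the SQL-style count(*) aggregate with group by.

```xml
<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xsi:schemaLocation='http://www.openmashup.org/schemas/v1.0/EMML/../schemas/EMMLSpec.xsd'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro='http://www.openmashup.org/schemas/v1.0/EMMLMacro'
    name='congressCountByStateGender'>
  <!-- hypothetical mashup name; the store name comes from the example above -->
  <output name='result' type='document' />
  <variable name="congress" type="document"/>
  <!-- fetchsize='500' is an arbitrary value chosen for illustration -->
  <loadfrom cache='sample-cache.LegislatorsDeclCache'
      fetchsize='500' variable='congress' />
  <!-- assumption: RAQL supports count(*) with group by -->
  <raql outputvariable='result'>
    select state, gender, count(*) as members from congress
    group by state, gender
    order by state, gender
  </raql>
</mashup>
```

Whatever fetch size you choose, the counts are final only once every page has been loaded, so the page size changes how the data is streamed and not the result of the query. The best value is likely to depend on row size and available memory, so treat it as something to measure.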

Handle Missing In-Memory Stores

Since In-Memory Stores can be created on demand, errors can occur in mashups that load datasets if the store is missing unexpectedly. One way to avoid this is to have a mashup optionally store the dataset if it is not present using the <try> and <catch> statements in EMML.

The following example uses a memoization pattern: it tries to load the dataset and, if the store is not found, loads the data from the original data source, stores it and continues the analysis.

Note: This example uses data from an Atom feed from the USGS on recent earthquakes.

The EMML and RAQL code for this single mashup is shown here:

<mashup xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
    xsi:schemaLocation='http://www.openmashup.org/schemas/v1.0/EMML/../schemas/EMMLSpec.xsd'
    xmlns='http://www.openmashup.org/schemas/v1.0/EMML'
    xmlns:macro='http://www.openmashup.org/schemas/v1.0/EMMLMacro'
    name='tryLoadCatch'>
  <output name='result' type='document' />
  <variable name="quakesDS" stream="true" type="document"/>
  <try>
    <!-- first attempt: load the dataset from the existing store and query it -->
    <loadfrom cache="quakeLastWeek" variable="quakesDS"/>
    <raql outputvariable="result">
      select title, date, lat, long from quakesDS
    </raql>
    <display message="dataset found"/>
    <catch type='EMMLException e'>
      <!-- store not found: fetch the feed, store it, then load and query -->
      <variable name="quakeSrc" type="document"/>
      <variable name="quakeStore" stream="true" type="document"/>
      <directinvoke
          endpoint='http://earthquake.usgs.gov/earthquakes/feed/atom/4.5/week'
          method='GET' stream='true' outputvariable='quakeSrc'/>
      <raql outputvariable="quakeStore" stream="true">
        select title, updated as date,
        link.href as link,
        split_part(point,' ','1') as lat,
        split_part(point,' ','2') as long,
        concat(category.term,' magnitude') as mag from quakeSrc/feed/entry
      </raql>
      <storeto cache="quakeLastWeek" key="#unique" variable="quakeStore"/>
      <display message="key stored"/>
      <loadfrom cache="quakeLastWeek" variable="quakesDS"/>
      <raql outputvariable="result">
        select title, date, lat, long from quakesDS
      </raql>
    </catch>
  </try>
</mashup>

The <try> block tries to retrieve the dataset stream from an existing In-Memory Store. If the store already exists, it executes a simple RAQL query.

The <catch> block catches the exception thrown if the store is not found in the <try> block. It then invokes the REST mashable, queries the results, stores the dataset in the store, and finally loads the dataset from the store and runs the same RAQL query.

If you run this mashup in the Mashup Editor, look at the Console section to see the messages output from the <display> statements in the <try> and <catch> blocks. The first time you run the mashup you should see the <catch> message. Run it again and you should see the message from <try>.