Scenario: "I want to use Windows NLB for my high availability cluster."

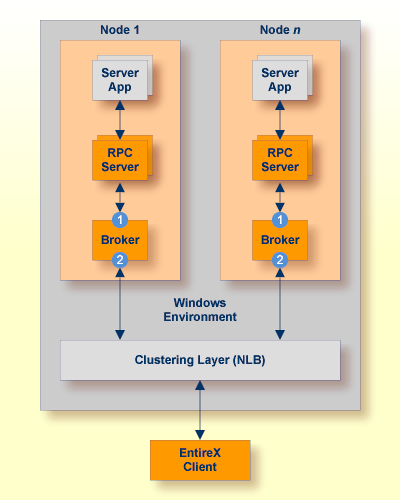

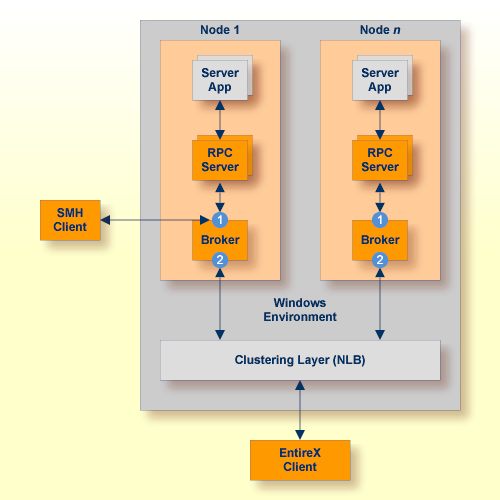

Segmenting dynamic workload from static server and management topology is critically important. Using broker TCP/IP-specific attributes, define two separate connection points:

One for RPC server-to-broker and admin connections.

The second for client workload connections.

See TCP/IP-specific Attributes under Broker Attributes. Sample attribute file settings:

| Sample Attribute File Settings | Note |
|---|---|
| `PORT=1972` | In this example, the HOST is not defined, so the default setting will be used (localhost). |
| `HOST=10.20.74.103` (or DNS name), `PORT=1811` | In this example, the HOST stack is the virtual IP address. The PORT will be shared by other brokers in the cluster. |
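As a quick sanity check that both connection points are actually listening, a small probe can attempt a TCP connection to each one. The following is a minimal sketch, not part of the product: the function names and the dictionary labels are illustrative assumptions, and the host and port values are simply the sample settings from the table above.

```python
import socket


def listener_is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False


# Sample values from the attribute file settings above; the labels are
# illustrative only.
CONNECTION_POINTS = {
    "management (RPC servers, admin)": ("localhost", 1972),
    "client workload (virtual IP)": ("10.20.74.103", 1811),
}


def check_connection_points(points=CONNECTION_POINTS, timeout: float = 3.0) -> dict:
    """Probe every configured connection point and report reachability."""
    return {
        label: listener_is_reachable(host, port, timeout)
        for label, (host, port) in points.items()
    }
```

Calling `check_connection_points()` on a cluster host returns a dictionary mapping each label to `True` or `False`. A successful connection only shows that something accepts TCP connections on that port; it does not confirm that the listener is a broker.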

We recommend the following:

Share configurations - you will want to consolidate as many configuration parameters as possible in the attribute setting. Keep separate yet similar attribute files.

Isolate workload listeners from management listeners.

Monitor Brokers through SMH.

All machines running the network load balancing service should have the correct local time. Ensure the Windows Time Service is properly configured on all hosts to keep clocks synchronized. Unsynchronized clocks cause a network login screen to pop up that does not accept valid login credentials.

You have to manually add each load balancing server individually to the load balancing cluster after you've created a cluster host.

To allow communication between servers in the same NLB cluster, each server requires the following registry entry: a DWORD value named "UnicastInterHostCommSupport", set to 1, under the key for each network interface card's GUID (`HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\WLBS\Parameters\Interface\{GUID}`).

NLB may conflict with some network routers, which are not able to resolve the IP address of the server and must be configured with a static ARP entry.
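The registry entry recommended above has to be created once per network interface GUID on every cluster host, so it can help to generate the commands rather than type them. The sketch below builds the argument list for the standard Windows `reg add` command. The function name and the GUID in the usage note are hypothetical, and the sketch assumes the key path contains a backslash between `Interface` and the GUID.

```python
from typing import List

# Parent key of the per-interface NLB settings, using the HKLM abbreviation
# for HKEY_LOCAL_MACHINE that reg.exe accepts.
INTERFACE_KEY = r"HKLM\System\CurrentControlSet\Services\WLBS\Parameters\Interface"


def unicast_interhost_reg_command(interface_guid: str) -> List[str]:
    """Build a 'reg add' command that sets UnicastInterHostCommSupport to 1.

    The GUID may be passed with or without surrounding braces.
    """
    guid = "{" + interface_guid.strip().strip("{}") + "}"
    return [
        "reg", "add", INTERFACE_KEY + "\\" + guid,
        "/v", "UnicastInterHostCommSupport",
        "/t", "REG_DWORD",
        "/d", "1",
        "/f",  # overwrite an existing value without prompting
    ]
```

For example, `unicast_interhost_reg_command("00000000-0000-0000-0000-000000000000")` returns a list that can be passed to `subprocess.run` from an elevated prompt on each host. Substitute the real GUID of each NLB-enabled interface for the placeholder.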

In addition to broker redundancy, you also need to configure your RPC servers for redundant operations. We recommend the following best practices when setting up your RPC servers:

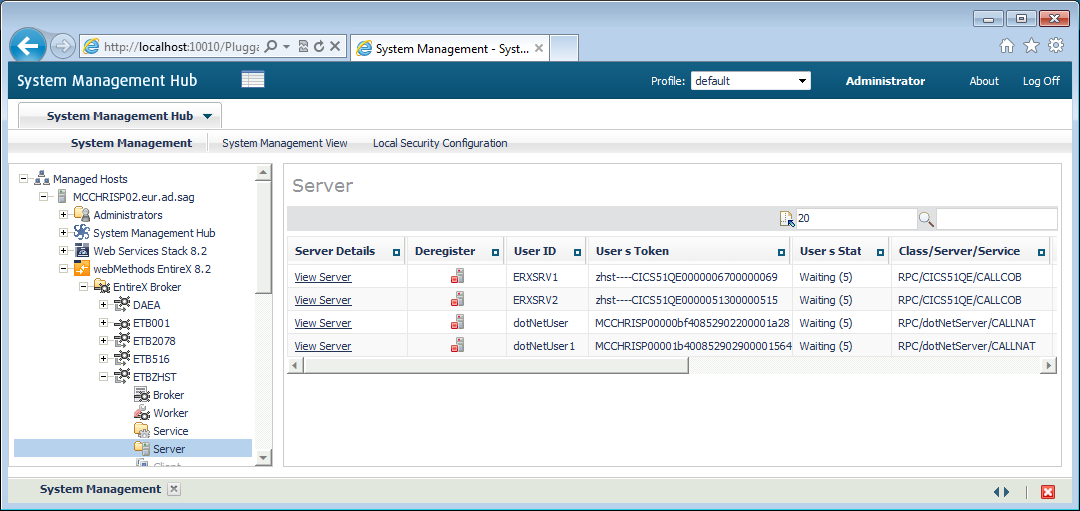

Make sure your definitions for CLASS/SERVER/SERVICE are identical across the clustered brokers. Using identical service names will allow the broker to round-robin messages to each of the connected RPC server instances.

For troubleshooting purposes, and if your site allows this, you can optionally use a different user ID for each RPC server.

RPC servers are typically monitored using SMH as services of a broker. Optionally, for example for troubleshooting purposes, the RPC servers can be configured with a unique TCP port number for SMH.

Note: SMH port is not supported by the Natural RPC server.

Establish the broker connection using the static Broker name:port definition.

Maintain separate parameter files for each Natural RPC Server instance.

Use the System Management Hub to monitor the status of the broker and RPC server instances using their respective address:port connections. Set up each connection with logical instance names.

The following screen shows two pairs of redundant RPC servers registered to the same broker from the Server view:

Each broker requires a static TCP port for RPC server and management communications.

This must be maintained separately from the broker's virtual IP address configuration.

An important aspect of high availability is how the system behaves during planned maintenance events such as lifecycle management, applying software fixes, or modifying the number of runtime instances in the cluster. Using a virtual IP networking approach for broker clustering keeps the overall system available while these tasks are carried out.

Broker administrators, notably on UNIX and Windows systems, need to start, ping (to check that the broker is alive), and stop brokers and RPC servers from a system command line or from within batch or shell scripts. To control and manage the life cycle of brokers, the following commands are available:

etbsrv BROKER START <broker-id>

etbsrv BROKER PING <broker-id>

etbsrv BROKER STOP <broker-id>

etbsrv BROKER RESTART <broker-id>

If only one user is to be permitted to execute commands, enter the command

etbsrv SECURITY ENABLE TRUSTED-USER=YES

The trusted user can then execute commands without any additional authentication. There can only be one trusted user.

To change the trusted user, modify the property install.user in file entirex.config.

See Administration Service Commands in the Windows Administration documentation for more information.

To start an RPC server

See Starting the RPC Server for UNIX | Windows | Java | .NET | XML/SOAP | Micro Focus | IMS Connect | CICS ECI | WebSphere MQ.

To ping an RPC server

Use the following Administration Service command:

etbsrv BROKER PINGRPC <broker-id> <class/server/service>

Return code 0 means the broker is running; any other value means the broker has stopped.
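For use in monitoring scripts, the ping commands can be wrapped so that the return code is turned into a boolean. This is a minimal sketch under two assumptions: `etbsrv` is on the `PATH`, and return code 0 signals success for both `BROKER PING` and `BROKER PINGRPC`. The function names and the injectable `run` parameter are illustrative and not part of the product.

```python
import subprocess
from typing import Callable, List, Optional


def build_ping_command(broker_id: str, service: Optional[str] = None) -> List[str]:
    """Build the etbsrv command line for a broker or RPC server ping.

    With no service, the broker itself is pinged; otherwise the RPC server
    registered as class/server/service is pinged through that broker.
    """
    if service is None:
        return ["etbsrv", "BROKER", "PING", broker_id]
    return ["etbsrv", "BROKER", "PINGRPC", broker_id, service]


def ping(
    broker_id: str,
    service: Optional[str] = None,
    run: Callable = subprocess.run,
) -> bool:
    """Return True if the ping command exits with return code 0.

    'run' defaults to subprocess.run and can be replaced for testing.
    Assumption: 0 means success; any other value is treated as failure.
    """
    result = run(build_ping_command(broker_id, service))
    return result.returncode == 0
```

A script could call `ping("<broker-id>")` before and after a planned restart, or `ping("<broker-id>", "<class/server/service>")` for an individual RPC server. If `etbsrv` cannot be found, `subprocess.run` raises `FileNotFoundError`, which this sketch deliberately does not catch.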

To stop an RPC server

See Stopping the RPC Server for UNIX | Windows | Java | .NET | XML/SOAP | Micro Focus | IMS Connect | CICS ECI | WebSphere MQ.

You can also use the command-line utility etbcmd. Example:

etbcmd -b <broker-id> -d SERVICE -o IMMED -m <class/server/service>

All hosts in the NLB cluster must reside on the same subnet, and the cluster's clients must be able to access this subnet.

When using NLB in multicast or unicast mode, routers need to accept proxy ARP responses (IP-to-network address mappings that are received with a different network source address in the Ethernet frame).

Make sure that Internet Control Message Protocol (ICMP) traffic to the cluster is not blocked by a router or firewall.

Cluster hosts and the virtual cluster IP need to have dedicated (static) IP addresses. This means you must request static IPs from your Network Services group.

NLB clustering is a stateless failover environment that does not provide application or in-flight message recovery.

Only TCP/IP may be configured on the network interface for which NLB is configured.