Communication Drivers

Overview

JavaScript communication drivers use streaming techniques or long polling, as required.

For a full list, please see the JavaScript API Documentation for Drivers.

The following sections provide a basic description of the main techniques employed by these drivers.

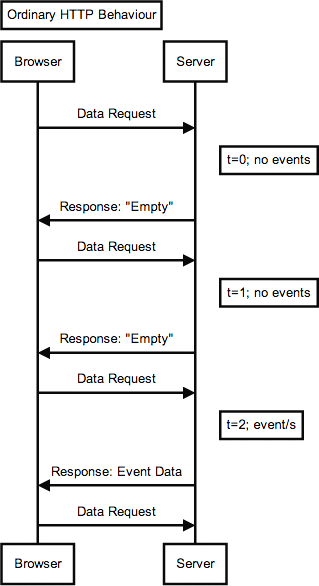

Standard HTTP Polling

Most non-Universal Messaging web applications make use of repeated, standard HTTP polling requests. Such application requests/responses look like this:

The Universal Messaging JavaScript API is more efficient than this. It implements several underlying drivers for communication between a Universal Messaging JavaScript client and a Universal Messaging realm server. These drivers can be conceptually divided into:

streaming drivers

long polling drivers

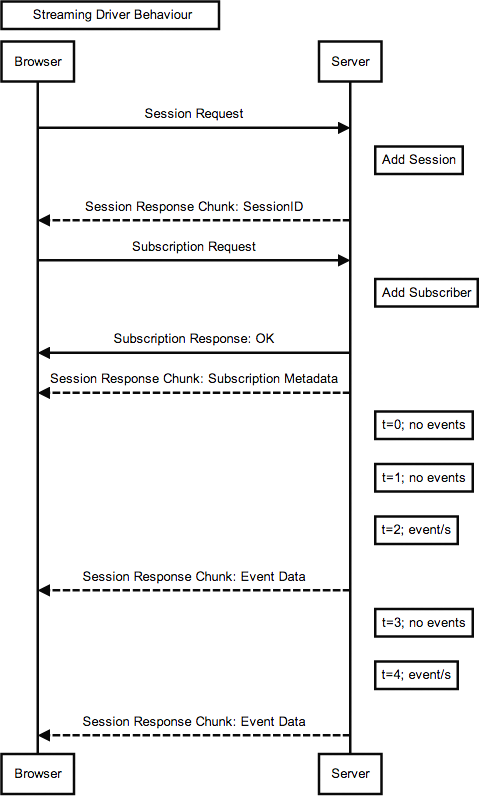

Streaming Drivers

The Streaming drivers implemented in Universal Messaging take advantage of various technologies implemented in different web browsers, and various mechanisms to achieve HTTP server push or HTTP streaming. These technologies and mechanisms include HTML5 Web Sockets, chunked XMLHttpRequest and XDomainRequest responses, EventSource/SSE, iFrame Comet Streaming and more.

The fundamental difference between the Universal Messaging JavaScript API's Streaming drivers and standard HTTP polling is that the Universal Messaging realm server does not terminate the HTTP connection after it sends response data to the client. The connection remains open, so that if additional data (such as Universal Messaging events) becomes available for the client, it can be delivered immediately, without being queued and without waiting for the client to make a subsequent request. The client can interpret the "partial" response chunks as they arrive from the server.

This is much more efficient than standard HTTP polling, since the client needs to make only a single HTTP request, yet receives ongoing data in a single, long-lived response.
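To illustrate what interpreting "partial" response chunks involves, here is a minimal sketch. It is not the Universal Messaging wire protocol, which this section does not describe: purely for illustration it assumes newline-delimited messages, and `StreamFramer` is a hypothetical name. The point it shows is that message boundaries need not line up with chunk boundaries, so the client buffers any trailing fragment and emits each complete message as soon as the chunk that finishes it arrives. In a browser the chunks might come from a `fetch` response via `response.body.getReader()` and a `TextDecoder`; here they are simulated so the example runs on its own.

```javascript
// Minimal sketch: turn the chunks of one long-lived streamed HTTP response
// into complete messages. ASSUMPTION: messages are newline-delimited; the
// real Universal Messaging wire format is different and not described here.
class StreamFramer {
  constructor(onMessage) {
    this.buffer = ""; // incomplete trailing message carried over between chunks
    this.onMessage = onMessage;
  }

  // Call once per chunk, as soon as it arrives from the server.
  push(chunk) {
    this.buffer += chunk;
    let newline;
    while ((newline = this.buffer.indexOf("\n")) !== -1) {
      const message = this.buffer.slice(0, newline);
      this.buffer = this.buffer.slice(newline + 1);
      if (message.length > 0) this.onMessage(message); // deliver immediately
    }
  }
}

// Simulated response body arriving as three chunks with arbitrary boundaries.
const received = [];
const framer = new StreamFramer((message) => received.push(message));
framer.push("evt-1\nev"); // "evt-1" is delivered now; "ev" waits in the buffer
framer.push("t-2\n");
framer.push("evt-3\n");
console.log(received); // [ 'evt-1', 'evt-2', 'evt-3' ]
```

The connection never has to close for "evt-1" to reach the application, which is exactly what distinguishes streaming from repeated polling.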

Streaming drivers are the preferred drivers to use in a Universal Messaging JavaScript application. Do note, however, that some environments may limit the successful use of streaming drivers (such as intermediate infrastructure with poorly configured client-side proxy servers or reverse proxy servers). In these instances, clients can be configured to fall back to a Long Polling driver (which can be considered a driver "of last resort").

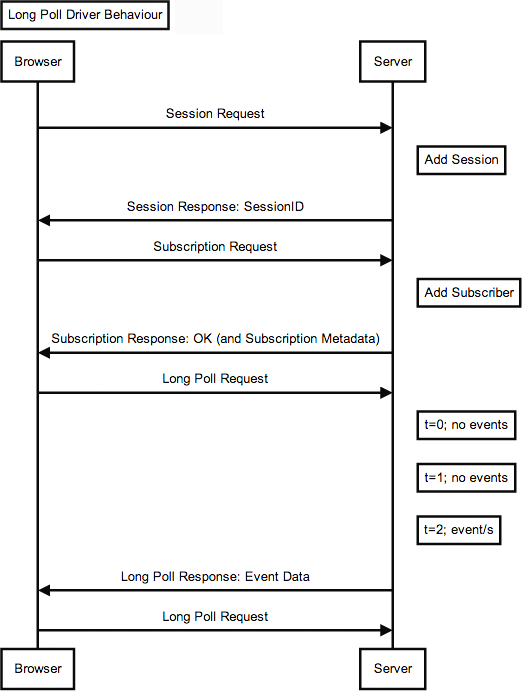

Long Polling Drivers

When using a Long Polling driver, a Universal Messaging client requests information from the realm server in a similar way to a normal HTTP poll. The primary difference is that if the server does not have any information for the client at that time, then instead of sending an empty response and closing the connection, the server will instead hold the request and wait for information (for example, Universal Messaging events) to become available. Once information is available for the client, the server completes its response and closes the connection. The Universal Messaging client will then immediately make a new Long Poll request for the next batch of information:

If information is constantly being provided, a client will end up making very frequent Long Poll requests (potentially as frequently as it would with a normal HTTP polling approach). The Universal Messaging realm server can be configured to delay responding and closing a connection for as long as desired. This gives administrators a choice between fulfilling requests as soon as data is available, and waiting for a time period so that information can accumulate before responding; the latter technique is recommended if a client is likely to receive many events per second.

Long Polling drivers are therefore not true "push" drivers, and should only be used under circumstances where real push is not possible (owing, for instance, to intermediate infrastructure such as poorly configured client-side proxy servers or reverse proxy servers).

Infrastructural Issues and Workarounds

Universal Messaging JavaScript clients are intended to receive data in near real-time. To facilitate this, such clients will tend to use one of the API's Streaming drivers (as opposed to basic, repetitive HTTP polling).

In most environments, a Streaming driver, which works over HTTP, can be used without problems. In some environments, however, infrastructure components can interfere with real-time HTTP streams. Here we will look at some possible causes of interference, and discuss how to work around them.

Client-Side Proxy Servers

Most client-side proxy servers will permit a client to make a long-lived connection to a Universal Messaging realm server (to see why a long-lived connection is important, please see the discussion of Streaming drivers above).

In some environments, however, a proxy server might interrupt these connections. This might be, for example, because:

the proxy server wishes to "virus check" all content it receives on behalf of its clients. In this case the proxy server may buffer the streaming response in its entirety before "checking" it and delivering it to the client. Since the response is, essentially, unending, the proxy will never deliver any such content to the client and the client will be forced to reset and try again. If the client has been configured to try other drivers, it will eventually fall back to a long-polling driver (which should work perfectly well in such an environment).

some companies may limit the size of responses which the proxy server will handle. In this case, the proxy server will forcefully close the connection. As a result, a Universal Messaging JavaScript client will automatically attempt to re-initialise the session, and gracefully continue. This should not affect usability too much (though clients will experience an unnecessary disconnect/reconnect cycle).

some companies may limit the time for which a connection can remain open through the proxy. Again, a Universal Messaging JavaScript client will automatically work around this as above.

It is strongly recommended that you use SSL-encrypted HTTP for your Universal Messaging applications. Many proxy servers will allow SSL-encrypted HTTP traffic to pass unhindered. This will ensure that the greatest number of clients can use an efficient streaming driver.

Reverse Proxy Servers and Load Balancers

If your infrastructure includes a reverse proxy server or a load balancer, it is important to ensure that "stickiness" is enabled if you are using this infrastructure to front more than one Universal Messaging realm server. That is, requests from an existing client should always be made to the same back-end Universal Messaging realm server.

Most load balancers offer stickiness based either on client IP address or using HTTP cookies. Client IP based stickiness can work well (though be aware that some clients may be making requests from different IP addresses, if, for instance, they are behind a bank of standard proxy servers in their own environment). Cookie-based stickiness will work well for most drivers (though note that some drivers, notably the "XDR" drivers which are based on Microsoft's XDomainRequest object, do not support cookies - please see the JavaScript API Documentation for Drivers for more information).
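A toy model can make the two stickiness strategies, and the XDR pitfall, more concrete. The sketch below is hypothetical throughout: the back-end names, the hash and the round-robin fallback are illustrative assumptions, not how any particular load balancer works. Client-IP stickiness hashes the source address, while cookie stickiness only works if the client sends the balancer's cookie back.

```javascript
// Toy model of load-balancer stickiness. Everything here is hypothetical:
// back-end names, the hash and the round-robin fallback are illustrative only.
const backends = ["um-realm-1", "um-realm-2", "um-realm-3"];

// Deterministic string hash (djb2-style), so a given key always maps to the
// same back end.
function hash(key) {
  let h = 5381;
  for (let i = 0; i < key.length; i++) {
    h = ((h * 33) ^ key.charCodeAt(i)) >>> 0;
  }
  return h;
}

let nextIndex = 0; // round-robin position used when there is nothing to stick on

function route(request, mode) {
  if (mode === "ip") {
    // Client-IP stickiness: same source address -> same back end.
    return backends[hash(request.clientIp) % backends.length];
  }
  // Cookie stickiness: only works if the client returns the balancer's cookie.
  if (request.cookies && request.cookies.lb) {
    return request.cookies.lb;
  }
  // No cookie (as with an XDR-based driver): any back end may be chosen.
  return backends[nextIndex++ % backends.length];
}

const a = route({ clientIp: "203.0.113.10" }, "ip");
const b = route({ clientIp: "203.0.113.10" }, "ip");
const c = route({ cookies: { lb: "um-realm-2" } }, "cookie");
const d1 = route({}, "cookie");
const d2 = route({}, "cookie");
console.log(a === b, c, d1 === d2); // true um-realm-2 false
```

The cookie-less requests at the end land on different back ends; this is the situation an XDR-based driver creates behind a cookie-sticky load balancer. Likewise, a client whose requests leave through a bank of proxies with different IP addresses would hash to different back ends under IP stickiness.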

Choosing Appropriate Drivers for Your Environment

While the default set of drivers works well in a simple environment where browsers connect directly to the server without intermediate infrastructure such as ill-configured proxy servers or overly aggressive antivirus products, in some cases you may wish to customise the driver set to minimise issues with clients behind such infrastructure.

In summary, to minimise rare but lengthy delays where present-day client infrastructure interferes with session initialisation, use the following set:

XHR_STREAMING_CORS

XDR_STREAMING

IFRAME_STREAMING_POSTMESSAGE

EVENTSOURCE_STREAMING_POSTMESSAGE

XDR_LONGPOLL

XHR_LONGPOLL_CORS

XHR_LONGPOLL_POSTMESSAGE

JSONP_LONGPOLL

AND use HTTPS with SSL certificates for the servers on which you deploy your HTML/JS and for all the UM servers.

More Details

Unless configured otherwise, Universal Messaging JavaScript clients will attempt to use the following drivers, in decreasing order of preference:

WEBSOCKET: Streaming driver for browsers supporting HTML5 Web Sockets.

XHR_STREAMING_CORS: Streaming driver for browsers supporting XMLHttpRequest with CORS (Cross-Origin Resource Sharing). Intended for Chrome, Firefox, Safari and IE10+.

XDR_STREAMING: Streaming driver for browsers supporting XDomainRequest (Microsoft Internet Explorer 8+). Intended for IE8 and IE9. Note that XDomainRequest, and hence the XDR_STREAMING driver, cannot send client cookies to the server.

IFRAME_STREAMING_POSTMESSAGE: Streaming driver for browsers supporting the cross-window postMessage API (per https://developer.mozilla.org/en/DOM/window.postMessage). Intended for Chrome, Firefox, Safari and IE8+.

EVENTSOURCE_STREAMING_POSTMESSAGE: Streaming driver for browsers supporting both Server-Sent-Events and the cross-window postMessage API.

XDR_LONGPOLL: Longpoll driver for browsers supporting XDomainRequest (Microsoft Internet Explorer 8+). Intended for IE8 and IE9. Note that XDomainRequest, and hence the XDR_LONGPOLL driver, cannot send client cookies to the server.

XHR_LONGPOLL_CORS: Longpoll driver for browsers supporting XMLHttpRequest with CORS (Cross-Origin Resource Sharing). Intended for Chrome, Firefox, Safari and IE10+.

XHR_LONGPOLL_POSTMESSAGE: Longpoll driver for browsers supporting the cross-window postMessage API. Intended for Chrome, Firefox, Safari and IE8+.

NOXD_IFRAME_STREAMING: Legacy non-cross domain streaming driver for older clients requiring streaming from the server that serves the application itself. Intended for Chrome, Firefox, Safari and IE6+.

JSONP_LONGPOLL: Longpoll driver for older browsers relying on DOM manipulation only (no XHR or similar required). Intended for Chrome, Firefox, Safari and IE6+.

The vast majority of clients settle on one of the first three streaming drivers.

As outlined in the API documentation, the developer can override the driver set and preference order. This is rarely recommended, however, unless a significant proportion of clients are located behind infrastructure that interrupts communication based on the typically preferred drivers. We shall explain how such interruptions can manifest themselves for each of these driver types.

Firstly, a little more detail on how driver failover works for the JavaScript API.

We will first look at how a client communicates with a single UM server:

A client browser first checks whether it supports the underlying technologies on which the current driver is based. If it does not, then it removes the driver from its list of possible drivers and will never attempt to use it again.

If the browser successfully initialises a session with the server, then it will always try to use the same driver thereafter, and will NOT fail over to a different driver (unless the user reloads the application).

If a browser does support the driver, then the driver gets 3 consecutive attempts to initialise a session. The first attempt happens immediately. The second connection attempt has a delay of 1 second. The third attempt has a delay of 2 seconds. This is to avoid compounding problems on a server that may be undergoing maintenance or other load issues (this is of particular importance if an environment supports many users, or has been configured to use only a single realm server).

After the third failure, if the client's session object has been configured with more than one driver (or if it is using the default set), the client switches to the next driver and once again immediately tries to connect and initialise a session. The new driver's second and third attempts are subject to the same delays as described above.
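The per-server failover rules above can be sketched as a small simulation. This is a minimal sketch under stated assumptions, not the client library's code: `initialiseSession`, `browserSupports` and `tryConnect` are hypothetical names, and each attempt is assumed to fail or succeed instantly, so the logged times show only the 1-second and 2-second back-off delays (real request timeouts would add to them).

```javascript
// Minimal sketch of per-server driver failover, as described above.
// Hypothetical names; each attempt is assumed to fail or succeed instantly,
// so the times below include only the back-off delays, not request timeouts.
const RETRY_DELAYS_MS = [0, 1000, 2000]; // attempt 1 immediately, then +1 s, then +2 s

function initialiseSession(drivers, browserSupports, tryConnect) {
  const log = [];
  let clockMs = 0;
  for (const driver of drivers) {
    // Drivers the browser cannot support are skipped without any attempt.
    if (!browserSupports(driver)) continue;
    for (let i = 0; i < RETRY_DELAYS_MS.length; i++) {
      clockMs += RETRY_DELAYS_MS[i];
      const ok = tryConnect(driver, i + 1);
      log.push({ driver, attempt: i + 1, atMs: clockMs, ok });
      if (ok) return { driver, log }; // this driver is then used from now on
    }
    // Third failure: move on to the next driver immediately.
  }
  return { driver: null, log };
}

// A browser without WebSocket support, where only long polling gets through.
const result = initialiseSession(
  ["WEBSOCKET", "XHR_STREAMING_CORS", "XHR_LONGPOLL_CORS"],
  (driver) => driver !== "WEBSOCKET",
  (driver) => driver === "XHR_LONGPOLL_CORS"
);
console.log(result.driver); // XHR_LONGPOLL_CORS
console.log(result.log.map((e) => `${e.driver}#${e.attempt}@${e.atMs}ms`).join(" "));
// XHR_STREAMING_CORS#1@0ms XHR_STREAMING_CORS#2@1000ms XHR_STREAMING_CORS#3@3000ms XHR_LONGPOLL_CORS#1@3000ms
```

Even with instant failures, each supported driver that fails costs 3 seconds of back-off before the next one is tried.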

Next, let us look at how a client communicates with a cluster of UM servers:

When attempting to initialise a session with a server within a cluster, a client will go through all drivers until one works, as described above. A side effect of this is that if the first server with which the client attempts to communicate is unresponsive, one can expect a delay of at least (number_of_supported_drivers * 3 seconds), plus any underlying request timeouts, before the client switches to the next UM server. In a worst-case scenario this could lead to a delay of 20 seconds or more, which is far from desirable.
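The "3 seconds" per driver is the sum of the two back-off delays (1 s before the second attempt, 2 s before the third). As a worked example, assume, purely for illustration, that a browser supports 8 of the configured drivers and that every attempt against the unresponsive server fails:

$$
t_{\text{switch}} \;\ge\; N_{\text{drivers}} \times (1\,\mathrm{s} + 2\,\mathrm{s}) + \sum_{k=1}^{3N_{\text{drivers}}} t_{\text{timeout},k} \;=\; 8 \times 3\,\mathrm{s} + \sum_{k=1}^{24} t_{\text{timeout},k} \;=\; 24\,\mathrm{s} + \sum_{k=1}^{24} t_{\text{timeout},k}
$$

Here $t_{\text{timeout},k}$ is whatever time attempt $k$ spends waiting before it fails (zero if it fails immediately), so 24 seconds is a lower bound for this hypothetical browser.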

A delay this long occurs only when the first UM server the client attempts to use is unavailable. If the first UM server had been available and subsequently became unavailable (thus disconnecting the browser), the browser would switch to a second realm server considerably more quickly, because:

If the browser gets a confirmed session to server X, then - as explained earlier - it will always try to use that driver thereafter.

If, having had a confirmed session to server X it gets disconnected from server X, then it will continue retrying to connect to server X with the same driver for a maximum of 5 consecutive failed attempts (any successful connection will reset the failure count to 0). If the 5th attempt fails, the browser will consider this server to be unavailable, and will switch to the next server, re-enable *all* drivers, and start cycling through them again (giving each one 3 chances to connect as usual). On the assumption that the second realm server is indeed available, a client would, at this point, reconnect immediately.
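The reconnection behaviour just described can be sketched as a small state machine. This is a minimal sketch: the `ReconnectPolicy` class, its method names and the realm URLs are hypothetical, and wrapping around to the first realm after the last one is an assumption the text does not cover.

```javascript
// Minimal sketch of reconnection after a confirmed session. Hypothetical class;
// wrapping from the last realm back to the first is an assumption.
const MAX_CONSECUTIVE_FAILURES = 5;

class ReconnectPolicy {
  constructor(realms, allDrivers) {
    this.realms = realms;
    this.allDrivers = allDrivers;
    this.realmIndex = 0;
    this.failures = 0;
    this.lockedDriver = null; // the driver that produced a confirmed session
  }

  onSessionConfirmed(driver) {
    this.lockedDriver = driver;
    this.failures = 0; // any successful connection resets the failure count
  }

  // Called after each failed reconnection attempt; returns what to try next.
  onReconnectFailed() {
    this.failures += 1;
    if (this.failures < MAX_CONSECUTIVE_FAILURES) {
      // Retry the same realm with the same driver.
      return { realm: this.realms[this.realmIndex], drivers: [this.lockedDriver] };
    }
    // 5th consecutive failure: treat the realm as unavailable, move on to the
    // next realm and re-enable all drivers for a fresh failover cycle.
    this.realmIndex = (this.realmIndex + 1) % this.realms.length;
    this.failures = 0;
    this.lockedDriver = null;
    return { realm: this.realms[this.realmIndex], drivers: [...this.allDrivers] };
  }
}

const policy = new ReconnectPolicy(
  ["https://realm-a.example.com", "https://realm-b.example.com"],
  ["XHR_STREAMING_CORS", "XHR_LONGPOLL_CORS"]
);
policy.onSessionConfirmed("XHR_STREAMING_CORS");
let next;
for (let i = 0; i < 5; i++) next = policy.onReconnectFailed();
console.log(next.realm, next.drivers.length); // https://realm-b.example.com 2
```

After the switch, the client would go through the normal per-driver failover cycle (3 attempts each) against the new realm.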

So one key here is to avoid including realm servers in a client's session configuration object if they are known to be unavailable before session initialisation. This can be done by dynamically generating session configuration objects based upon the availability of back-end servers (though this is beyond the scope of the UM API).
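One way to act on this is to build the realm list on the server side just before serving the page. The sketch below assumes you already have some way of knowing which realms are up (the `isAvailable` callback is a hypothetical stand-in for your own monitoring, not part of the UM API), and the example URLs are made up.

```javascript
// Minimal sketch: build a session's realm list from known back-end availability.
// `isAvailable` is a hypothetical stand-in for your own monitoring; it is not
// part of the UM API.
function buildRealmList(candidateRealms, isAvailable) {
  const up = candidateRealms.filter((realm) => isAvailable(realm));
  // If every check failed, keep the full list rather than an empty one, so the
  // client can still attempt to connect.
  return up.length > 0 ? up : [...candidateRealms];
}

const status = {
  "https://realm-a.example.com": false, // e.g. down for maintenance
  "https://realm-b.example.com": true,
};
const realms = buildRealmList(Object.keys(status), (realm) => status[realm]);
console.log(realms); // [ 'https://realm-b.example.com' ]
```

The resulting list would then be written into the session configuration object delivered to the browser; see the JavaScript API documentation for the configuration property that holds the realm list.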

An alternative approach to avoid such a delay would be to reduce the number of drivers a browser could attempt, but this will doubtless lead to fewer clients being able to connect, so it is not recommended.

Finally, let's look at the individual drivers themselves. All notes below are for traffic over HTTP (not HTTPS):

WEBSOCKET Streaming driver for browsers supporting HTML5 Web Sockets.

In a typical production deployment, one would expect the majority of external client browsers to not be able to communicate using WebSockets. There are several reasons for this:

Firstly, a significant proportion of end users are using web browsers that do not yet support the WebSocket protocol (e.g. around 30% of users are on IE9 or earlier, with no WebSocket support).

Secondly, users behind corporate proxy servers will, at present, find that the proxy server will in all likelihood not support the WebSocket protocol, even if their browser does; this is expected to change, but it will take years, not months.

Thirdly, many companies wish to deploy their UM servers behind standard reverse proxy servers or load balancers such as Oracle iPlanet (which, like the majority of such products, does not support WebSockets either); again, WebSocket support will appear in such products, but it is simply not there today.

Fourthly, a client-side antivirus product can interfere with the browser's receipt of real-time data sent down a WebSocket response. This is common, for example, for clients using Avast's WebShield functionality; these clients usually have to be configured to use a long polling driver unless they configure their antivirus product to ignore traffic from specific hosts. The issue is that the antivirus product attempts to buffer the entire response so that it can process it for viruses and the like before giving it to the client. Avast upgraded their product to better handle WebSocket last year, and as part of that process they have whitelisted a number of well-known sites that use WebSocket, but the product may still be a little overzealous with WebSocket connections to hosts it does not know.

These issues in combination make it more likely than not that a corporate web user will be unable to use WebSockets.

That said, if a browser doesn't support WebSocket, it will fail over to the next driver immediately. On the other hand, if the browser does support WebSockets but an intermediate proxy or reverse proxy server doesn't, then the WebSocket HTTP handshake may, in some circumstances, result in the proxy immediately returning an error response to the browser. Under these conditions, the browser will retry the WebSocket driver a maximum of three times (incurring the 3-second delay described in the back-off rules above) before failing over to the next driver. The worst-case scenario is a client using a proxy that simply ignores the WebSocket HTTP Protocol Upgrade handshake; here you are at the mercy of the client timing out, as the UM server would never receive the WebSocket upgrade request. Although you can set these timeouts to whatever values you wish, the default values are set to support clients on slow connections; lowering them will cause clients on slow connections to frequently fail to initialise sessions.

For client environments that support it, WebSocket is an excellent choice of driver. Whether you wish to risk a 3-second delay (or, in the rare cases described above, much higher) for those client environments that don't support it is down to your distribution of customers. If you are building applications targeting customers whose infrastructure you understand, then it is worth using the WebSocket driver. If the audience is more generally distributed, then a good proportion of the clients that are unable to use WebSockets will incur a session initialisation delay. You may therefore wish to exclude the WEBSOCKET driver from your application's session configuration.

XHR_STREAMING_CORS Streaming driver for browsers supporting XMLHttpRequest with CORS (Cross-Origin Resource Sharing). Intended for Chrome, Firefox, Safari and IE10+.

Unlike WebSocket, this driver does not rely on anything other than standard HTTP requests and a streaming HTTP response. If the client supports the driver, the only issues here are intermediate infrastructure components interfering with the real-time stream of data sent from the UM server to the client:

An intermediate proxy server may choose to buffer the streamed response rather than streaming it directly to the client in real time. Some have a habit of buffering all unencrypted traffic for virus-scanning purposes, thus preventing clients from using streaming drivers of any kind. This is not very common, but it does happen.

More common is the interruption of a streamed response by client-side antivirus products like Avast's WebShield (see above, in the WebSocket driver discussion). In these cases, the browser would not receive the session initialisation response (as it is being buffered by the antivirus product), and would eventually time out. This is much slower than failing immediately.

XDR_STREAMING Streaming driver for browsers supporting XDomainRequest (Microsoft Internet Explorer 8+). Intended for IE8 and IE9. Note that XDomainRequest, and hence the XDR_STREAMING driver, cannot send client cookies to the server.

Although IE8 and IE9 support the XHR object, they cannot use the XHR object to make cross-domain requests. This driver therefore instead uses a Microsoft-specific object called the XDomainRequest object which can support streaming responses to fully cross-domain requests.

As mentioned in its description, this driver cannot send client cookies to the server, because Microsoft chose to prevent cookies being sent via the XDR object. As a result, any intermediate DMZ infrastructure (such as a load balancer) that relies on client-side HTTP cookies to maintain, for example, load balancer server "stickiness" will be unable to maintain stickiness, since no cookies that the load balancer might set will appear in subsequent client requests when using an XDR-based driver. If the load balancer is fronting more than one UM server, then this setup can result in the load balancer sending a client's post-session-initialisation requests, such as a subscription request, to a random back-end UM server rather than to the server with which the client had initialised a (load-balancer-proxied) session. This causes the client to reinitialise its session, and the cycle repeats indefinitely. In these cases, if load balancers are indeed using cookie-based stickiness, then you have two options: either explicitly configure JavaScript sessions with a set of drivers that excludes all XDR variants, or change the load balancer configuration to use client IP-based stickiness instead of cookie-based stickiness.

IFRAME_STREAMING_POSTMESSAGE Streaming driver for browsers supporting the cross-window postMessage API (per https://developer.mozilla.org/en/DOM/window.postMessage). Intended for Chrome, Firefox, Safari and IE8+.

In all likelihood, a client browser will settle on one of the three drivers discussed above before it would fail over to this driver. This driver is really only of use in environments where, for some reason, a browser is unable to create an instance of an XHR object. This used to be the case in some older versions of IE if, for example, a Windows administrator's policy prevented IE from invoking ActiveX objects (such as the XMLHttpRequest object). In modern versions of IE, however, XMLHttpRequest is a native non-ActiveX object, so this is less of an issue.

The downsides of this driver are the same as those for the XHR_STREAMING_CORS driver: proxy and antivirus product interference.

EVENTSOURCE_STREAMING_POSTMESSAGE Streaming driver for browsers supporting both Server-Sent-Events and the cross-window postMessage API.

This driver is really only useful to a small subset of modern browsers such as Opera. It does not rely on any unusual HTTP behaviour, and is therefore subject only to the same drawbacks as the other streaming drivers: proxy and antivirus product interference.

XDR_LONGPOLL Longpoll driver for browsers supporting XDomainRequest (Microsoft Internet Explorer 8+). Intended for IE8 and IE9. Note that XDomainRequest, and hence the XDR_LONGPOLL driver, cannot send client cookies to the server.

This is the most efficient long-polling driver for IE8 and IE9. Its downside is the lack of cookie support. This is only an issue if dealing with certain load balancer configurations (see discussion of XDR_STREAMING).

XHR_LONGPOLL_CORS Longpoll driver for browsers supporting XMLHttpRequest with CORS (Cross-Origin Resource Sharing). Intended for Chrome, Firefox, Safari and IE10+.

This is the most efficient long-polling driver for non-IE browsers and for IE10+.

As with all long polling drivers (including XHR_LONGPOLL_POSTMESSAGE and JSONP_LONGPOLL, discussed below), the browser will maintain an open connection to the server until data is available for transport to the browser. As soon as data arrives for delivery to the browser, the server will wait for a short period of time (known as the server-side "Long Poll Active Delay" timeout, which defaults to 100ms) before sending the data to the browser and closing the connection. The browser will then immediately make a new long-poll connection to the server, in preparation for more data. Since the server closes the connection immediately after sending any data, intermediary proxy servers and antivirus products will not buffer the response in the same way as they might buffer a streaming response, but will instead relay it to the browser immediately.

Note that if no data is available to be sent to the browser (if, say, the client is subscribed to a channel which rarely contains events) then the long-poll connection will remain open for a limited period before being closed by the server (at which point the browser will automatically create a new long-poll request and wait for data once again). The length of time for which such "quiet" connections stay open is defined by the "Long Poll Idle Delay" value, which can be set using Enterprise Manager (see the JavaScript tab for the relevant Interface). It is important that this value is lower than the value of any intermediary proxy servers' own timeouts (which are often 60 seconds, but sometimes as low as 30 seconds). A suitable production value for "Long Poll Idle Delay" might therefore be 25000ms (25 seconds).
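To make the interplay between the "Long Poll Active Delay" and the "Long Poll Idle Delay" concrete, here is a simulation of long polling on a virtual clock. It is a simplified model, not the realm server's implementation: it assumes zero network round-trip time and a browser that re-polls the instant a response arrives, and `simulateLongPoll`, `activeDelay` and `idleDelay` are hypothetical names standing in for the two server settings.

```javascript
// Simplified virtual-clock model of long polling (all times in ms).
// Assumptions: zero network round-trip time, and the browser re-polls the
// instant a response arrives. Not the realm server's actual implementation.
function simulateLongPoll(eventTimes, { activeDelay, idleDelay, horizon }) {
  if (idleDelay <= 0) throw new Error("idleDelay must be positive");
  const pending = [...eventTimes].sort((x, y) => x - y);
  const responses = [];
  let t = 0; // time at which the current long-poll request is opened
  while (t < horizon) {
    let respondAt;
    if (pending.length > 0 && pending[0] <= t + idleDelay) {
      // Data is (or becomes) available: wait activeDelay so more can accumulate.
      respondAt = Math.max(pending[0], t) + activeDelay;
    } else {
      // Quiet connection: the server closes it empty when idleDelay expires.
      respondAt = t + idleDelay;
    }
    const events = [];
    while (pending.length > 0 && pending[0] <= respondAt) events.push(pending.shift());
    responses.push({ at: respondAt, events });
    t = respondAt; // the browser immediately opens the next long-poll request
  }
  return responses;
}

const responses = simulateLongPoll([50, 120, 130, 5000], {
  activeDelay: 100, // default Long Poll Active Delay
  idleDelay: 25000, // suggested production Long Poll Idle Delay
  horizon: 30000,   // stop after 30 s of simulated time
});
for (const r of responses) console.log(r.at, r.events);
// 150 [ 50, 120, 130 ]
// 5100 [ 5000 ]
// 30100 []
```

The three events arriving within 100 ms of each other share a single response, the isolated event gets its own, and the quiet period ends with an empty response when the idle delay expires.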

This driver, like all long polling drivers, is good for browsers that want low-latency but infrequent updates. Long polling drivers are not a good choice for browsers that receive a continual stream of many messages per second, however (as the browser may end up making several requests per second to the server, depending upon the "Long Poll Active Delay" value). In such cases it would be prudent to increase the "Long Poll Active Delay" value significantly from its default value of 100ms, to perhaps 1000ms or more (while acknowledging that the browsers using a long polling driver in such a scenario would no longer be receiving "near-real-time" data). For browsers that are instead subscribed to channels (or members of datagroups) that have relatively infrequent updates, the "Long Poll Active Delay" can potentially be lowered to 0, resulting in real-time delivery despite the use of a long-polling driver.
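A rough estimate shows why the active delay matters for busy channels. Assuming events are always waiting, each long-poll cycle lasts roughly the active delay plus one network round trip, so the request rate is about 1000 / (activeDelay + RTT) per second. The 50 ms round-trip time below is an assumed figure, and `longPollRequestsPerSecond` is a hypothetical helper.

```javascript
// Back-of-the-envelope request rate for a long-polling client on a busy channel.
// Assumes events are always waiting, so every cycle lasts activeDelay plus one
// network round trip (rttMs is an assumed figure).
function longPollRequestsPerSecond(activeDelayMs, rttMs) {
  const cycleMs = activeDelayMs + rttMs;
  if (cycleMs <= 0) throw new Error("cycle time must be positive");
  return 1000 / cycleMs;
}

for (const activeDelay of [0, 100, 1000]) {
  const rate = longPollRequestsPerSecond(activeDelay, 50); // assume a 50 ms round trip
  console.log(`${activeDelay} ms active delay: ~${rate.toFixed(1)} requests/s`);
}
// 0 ms active delay: ~20.0 requests/s
// 100 ms active delay: ~6.7 requests/s
// 1000 ms active delay: ~1.0 requests/s
```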

XHR_LONGPOLL_POSTMESSAGE Longpoll driver for browsers supporting the cross-window postMessage API. Intended for Chrome, Firefox, Safari and IE8+.

This is the most efficient long-polling driver for non-IE browsers that do not support CORS, and is a cookie-supporting alternative to XDR_LONGPOLL for IE8 and IE9. See the XHR_LONGPOLL_CORS discussion for details on how long polling functions.

NOXD_IFRAME_STREAMING Legacy non-cross domain streaming driver for older clients requiring streaming from the realm that serves the application itself. Intended for Chrome, Firefox, Safari and IE6+.

This is a streaming driver that is subject to the same issues as the other streaming drivers: proxy and antivirus product interference.

In addition, unlike all the other drivers, which are fully cross-domain (allowing communication with a UM server on a different domain from the server that serves the application's HTML/JS), this driver only permits communication back to the same server that served the HTML/JS. This implies that the application's HTML/JS must be served from a file plugin on the UM server in question.

JSONP_LONGPOLL Longpoll driver for older browsers relying on DOM manipulation only. Browser will show "busy indicator/throbber" when in use. Intended for Chrome, Firefox, Safari and IE6+.

This is the least efficient long-poll driver, but the most likely one to work in all circumstances. See the XHR_LONGPOLL_CORS discussion for details on how long polling functions.

Dealing with Intermediate Infrastructure Issues

As you can see, proxy servers are a particular bane for streaming HTTP traffic. Happily, practically all of these issues can be mitigated simply by using HTTPS instead of HTTP. In these cases, a proxy server will blindly proxy the HTTPS traffic without attempting to understand or modify the HTTP conversation contained within, and without interfering with it. If, therefore, you deploy your application on an HTTPS server, and install SSL certificates on your UM servers, you should be able to use the default set of drivers with minimal problems.

We would also point out that in our experience all production deployments at our customers' sites are secured with HTTPS (SSL or TLS). Besides helping browsers behind certain proxy servers maintain persistent connections that would otherwise be interrupted by naively configured proxy servers (perhaps buffering for virus checking), or by misconfigured or old proxies (that cannot understand WebSocket Protocol Upgrade handshakes), HTTPS offers clear security benefits and improves usability.

Working around client-side antivirus products is a little more difficult, unfortunately. One option might be to offer a link to a "long-poll-only" version of the application, and to show this link on screen only when a client is attempting to initialise a session for the first time. For clients which successfully initialise a session using a streaming driver, the link will be replaced with actual application content in no time, but for clients having problems, the ability to click a "click here if you're having problems connecting" is probably sufficient.
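One way to implement the "long-poll-only" link is to let a single page choose its driver list from a URL query parameter. In this sketch the parameter name `transport`, the value `longpoll` and the function name `driversForUrl` are illustrative assumptions; the driver names are kept as strings so the example runs outside a browser, and in the application you would map them to the corresponding `Nirvana.Driver` constants before passing them as `drivers` to `Nirvana.createSession`.

```javascript
// Minimal sketch of a "long-poll-only" fallback link. The query parameter
// `transport=longpoll` and the function name are hypothetical; driver names
// are plain strings here and would be mapped to Nirvana.Driver constants.
const DEFAULT_DRIVERS = [
  "XHR_STREAMING_CORS",
  "XDR_STREAMING",
  "IFRAME_STREAMING_POSTMESSAGE",
  "EVENTSOURCE_STREAMING_POSTMESSAGE",
  "XDR_LONGPOLL",
  "XHR_LONGPOLL_CORS",
  "XHR_LONGPOLL_POSTMESSAGE",
  "JSONP_LONGPOLL",
];
// Keep only the long-polling drivers, preserving their order of preference.
const LONGPOLL_ONLY_DRIVERS = DEFAULT_DRIVERS.filter((d) => d.includes("LONGPOLL"));

function driversForUrl(search) {
  const params = new URLSearchParams(search); // a leading "?" is ignored
  return params.get("transport") === "longpoll" ? LONGPOLL_ONLY_DRIVERS : DEFAULT_DRIVERS;
}

console.log(driversForUrl("?transport=longpoll").join(", "));
// XDR_LONGPOLL, XHR_LONGPOLL_CORS, XHR_LONGPOLL_POSTMESSAGE, JSONP_LONGPOLL
console.log(driversForUrl("").length); // 8
```

In the page, you would call `driversForUrl(window.location.search)`, and the "click here if you're having problems connecting" link would point at the same page with `?transport=longpoll` appended.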

Other "workarounds", such as remembering the driver that worked for a browser as a value within a persistent cookie (so that it can be reused automatically thereafter), are risky; a client can be a laptop which subsequently connects via a proxy with a completely different configuration, for example. Such approaches are best not relied upon.

It is a shame to abandon WebSocket given its clear benefits, but in a production deployment we would, for the sake of simplicity, suggest the following as a useful custom set of drivers:

XHR_STREAMING_CORS

XDR_STREAMING

IFRAME_STREAMING_POSTMESSAGE

EVENTSOURCE_STREAMING_POSTMESSAGE

XDR_LONGPOLL

XHR_LONGPOLL_CORS

XHR_LONGPOLL_POSTMESSAGE

JSONP_LONGPOLL

This basically leaves out WEBSOCKET because of the risk of a prolonged "failure" time for a minority of clients behind incompatible proxies, and also leaves out the "legacy" NOXD_IFRAME_STREAMING driver, since this is the only non-cross-domain driver and any production application's HTML/JS would likely benefit from being deployed on a static web server rather than on a file plugin within the messaging server.

If your DMZ infrastructure includes load balancer components that rely on HTTP cookies for "stickiness", then the XDR-based drivers should not be used (see the discussion of XDR_STREAMING above). In this case, the set of custom drivers would be best reduced to:

XHR_STREAMING_CORS

IFRAME_STREAMING_POSTMESSAGE

EVENTSOURCE_STREAMING_POSTMESSAGE

XHR_LONGPOLL_CORS

XHR_LONGPOLL_POSTMESSAGE

JSONP_LONGPOLL
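The selection rules in this section can be summarised in a small function. This is a sketch, not part of the UM API: `chooseDrivers` and its option names are hypothetical, it encodes only the three rules stated here (WebSocket versus client-side proxies, XDR versus cookie-based stickiness, NOXD_IFRAME_STREAMING versus where the HTML/JS is served from), and it returns driver names as strings to be mapped to `Nirvana.Driver` constants.

```javascript
// Sketch of the driver-selection rules in this section. Hypothetical helper,
// not part of the UM API; returns driver names to map to Nirvana.Driver.
const ALL_DRIVERS = [ // default order of preference
  "WEBSOCKET",
  "XHR_STREAMING_CORS",
  "XDR_STREAMING",
  "IFRAME_STREAMING_POSTMESSAGE",
  "EVENTSOURCE_STREAMING_POSTMESSAGE",
  "XDR_LONGPOLL",
  "XHR_LONGPOLL_CORS",
  "XHR_LONGPOLL_POSTMESSAGE",
  "NOXD_IFRAME_STREAMING",
  "JSONP_LONGPOLL",
];

function chooseDrivers({ clientsBehindProxies, cookieStickyLoadBalancer, htmlServedByUmFilePlugin }) {
  return ALL_DRIVERS.filter((driver) => {
    // WebSocket risks slow failover behind proxies that do not support it.
    if (driver === "WEBSOCKET" && clientsBehindProxies) return false;
    // XDR drivers cannot send cookies, which breaks cookie-based stickiness.
    if (driver.startsWith("XDR_") && cookieStickyLoadBalancer) return false;
    // The only same-origin driver: usable only if the UM server serves the HTML/JS.
    if (driver === "NOXD_IFRAME_STREAMING" && !htmlServedByUmFilePlugin) return false;
    return true;
  });
}

const drivers = chooseDrivers({
  clientsBehindProxies: true,
  cookieStickyLoadBalancer: false,
  htmlServedByUmFilePlugin: false,
});
console.log(drivers.length); // 8
```

With these answers the function yields the eight-driver custom set recommended earlier; setting `cookieStickyLoadBalancer` to `true` yields the reduced six-driver set listed just above.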

What Drivers Should I Use?

This example can help you choose a suitable configuration:

| Question | Answer |
| --- | --- |
| Are your application's clients typically behind proxy servers? | Yes |
| Is your Universal Messaging server behind a Load Balancer? | No, we do not use a Load Balancer |
| Is your Universal Messaging server behind a Reverse Proxy Server? | No, we do not use a Reverse Proxy Server |
| Where is your application's HTML/JS served from? | It is served from a different web server (e.g. Apache) |

Suggested Session Driver Configuration:

```javascript
var session = Nirvana.createSession({
    // The WEBSOCKET driver is best not used if clients are behind
    // traditional proxy servers.
    // NOXD_IFRAME_STREAMING excluded as it won't work when
    // hosting application HTML on a 3rd-party webserver.
    drivers : [
        Nirvana.Driver.XHR_STREAMING_CORS,
        Nirvana.Driver.XDR_STREAMING,
        Nirvana.Driver.IFRAME_STREAMING_POSTMESSAGE,
        Nirvana.Driver.EVENTSOURCE_STREAMING_POSTMESSAGE,
        Nirvana.Driver.XDR_LONGPOLL,
        Nirvana.Driver.XHR_LONGPOLL_CORS,
        Nirvana.Driver.XHR_LONGPOLL_POSTMESSAGE,
        Nirvana.Driver.JSONP_LONGPOLL
    ]
});
```

Corresponding Server-Side Configuration:

Remember to include the hostname(s) of all servers hosting your application HTML in the CORS Allowed Origins field.

Ensure that you have configured a file plugin on the Universal Messaging interface to which JavaScript clients will connect, and that you have configured it to serve /lib/js/crossDomainProxy.html correctly.

Ensure that the Long Poll Idle Delay value is set to be lower than your Load Balancer or Reverse Proxy server's timeout value. This setting is in ms, and a good value is usually around 25000.

Ensure that the Long Poll Idle Delay value is set to be lower than any intermediate proxy server's timeout value. Many proxy servers have timeouts as low as 30 seconds (though an administrator may have lowered this, of course). In the Universal Messaging server, this setting is in ms, and a pragmatic value is usually around 25000. You may need to lower it further if external clients behind remote proxy servers fail to maintain sessions when using a long polling driver.
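A quick sanity check of a proposed Long Poll Idle Delay against the timeouts of the intermediaries you know about could look like the following. The 5-second safety margin and the name `checkIdleDelay` are assumptions made for illustration; the guidance above only requires the idle delay to be lower than every intermediary's timeout.

```javascript
// Sketch: check a proposed Long Poll Idle Delay against the idle timeouts of
// known intermediaries (proxies, load balancers, reverse proxies). All in ms.
// The 5000 ms safety margin is an assumption, not a documented requirement.
function checkIdleDelay(idleDelayMs, intermediaryTimeoutsMs, marginMs = 5000) {
  if (intermediaryTimeoutsMs.length === 0) {
    throw new Error("list at least one intermediary timeout");
  }
  const tightestTimeoutMs = Math.min(...intermediaryTimeoutsMs);
  const suggestedMaxMs = tightestTimeoutMs - marginMs;
  // Must be strictly below the tightest timeout and within the safety margin.
  const ok = idleDelayMs < tightestTimeoutMs && idleDelayMs <= suggestedMaxMs;
  return { ok, tightestTimeoutMs, suggestedMaxMs };
}

// Proxies with 60 s and 30 s timeouts: 25 s fits, 45 s does not.
console.log(checkIdleDelay(25000, [60000, 30000]).ok); // true
console.log(checkIdleDelay(45000, [60000, 30000]).ok); // false
```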