Clusters with Sites

Sites are an exception to the Universal Messaging Cluster Quorum Rule (see Quorum). Although our recommended approach to deploying a cluster is a minimum of three locations and an odd number of nodes across the cluster, not all organizations have three physical locations with the required hardware. For BCP (Business Continuity Planning) or DR (Disaster Recovery), organizations may follow a standard approach with just two locations: a primary and a backup:

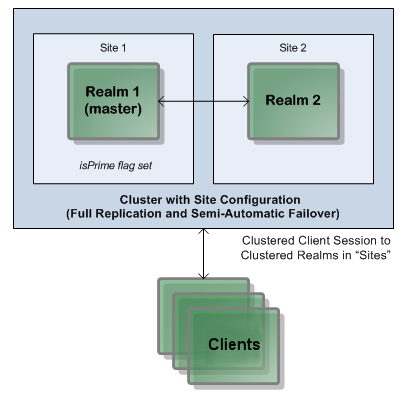

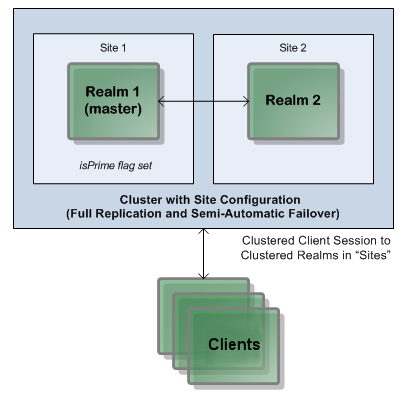

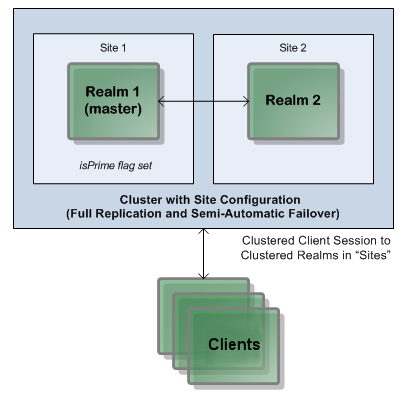

Two-realm cluster over two sites, using Universal Messaging Clusters with Sites.

With only two physical sites available, the Quorum rule of 51% or more of cluster nodes being available is not reliably achievable, with either an odd or an even number of realms split across these sites. For example, if you deploy a two-realm cluster, and locate one realm in each available location, then as soon as either location is lost, the entire cluster cannot function because of the 51% quorum rule:

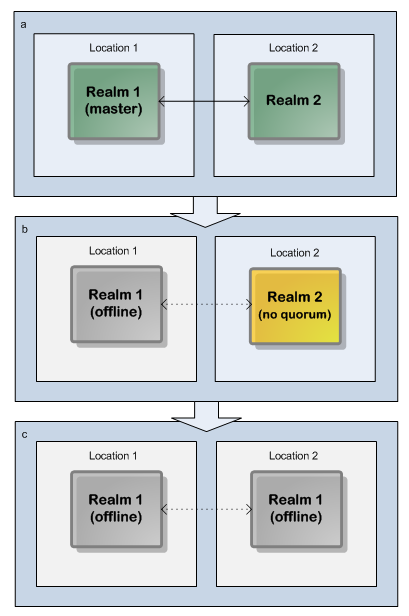

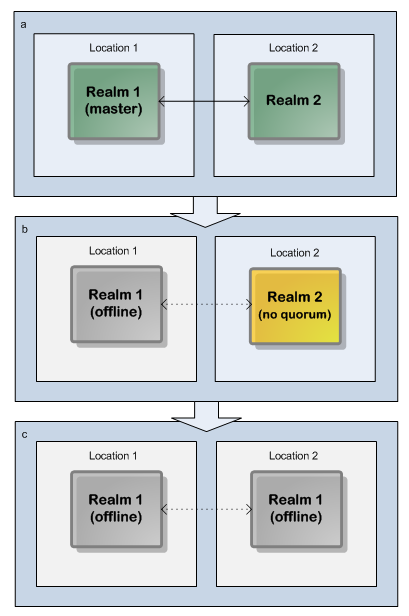

Two-realm cluster over two locations: a 51% quorum is unachievable if one location/realm fails.

Note: Dotted lines represent interrupted communication owing to server or network outages.

Similarly, if you deployed a three-node cluster with one realm in Location 1 and two in Location 2, and then lost access to Location 1, the cluster would still be available; if, however, you lost Location 2, the cluster would not be available since only 33% of the cluster's realms would be available.
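The arithmetic behind these two examples can be sketched in a few lines of Python. This is an illustration only, not part of Universal Messaging: the function name `has_quorum` is hypothetical, and the 51% rule is interpreted here as a strict majority of realms, which gives the same answers as 51% for the small clusters discussed above.

```python
def has_quorum(available: int, total: int) -> bool:
    """Return True if the reachable realms form a strict majority of the cluster.

    Assumption: "51% or more" is modelled as "more than half".
    """
    if total <= 0 or not 0 <= available <= total:
        raise ValueError("expected 0 <= available <= total and total > 0")
    return 2 * available > total


# Two-realm cluster, one realm per location: losing either location leaves 1 of 2.
print(has_quorum(1, 2))  # False

# Three-realm cluster, one realm in Location 1 and two in Location 2.
print(has_quorum(2, 3))  # Location 1 lost, Location 2 remains: True
print(has_quorum(1, 3))  # Location 2 lost, Location 1 remains: False
```

Whichever way the realms are split across two locations, one of the locations always holds half or fewer of them, so losing the other location always breaks quorum.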

This problem has been addressed through the introduction of Universal Messaging Clusters with Sites. The basic concept of Sites is that if only two physical locations are available, and Universal Messaging Clustering is used to provide High Availability and DR, it should be possible for one of those sites to function with less than 51% of the cluster available. This is achieved by allowing an additional vote to be allocated to either of the physical locations in order to achieve cluster quorum.

Example: Achieving Quorum using Universal Messaging Clusters with Sites

Consider an example scenario where there are two physical locations: the default production site, and a disaster recovery site.

We can deploy a cluster of two realms: one in the production site and one in the DR site. Normally this configuration wouldn't be able to satisfy the 51% quorum rule in the event of the loss of one location/realm. However, within the Universal Messaging Admin API, and specifically within a cluster node, it is now possible to define individual Site objects and allocate each realm within the cluster to one of these physical sites. Each defined site contains a list of its members, and a flag to indicate whether the site as a whole can cast an additional vote. This flag is known as the isPrime flag.

We can thus define two sites in our cluster:

production site

disaster recovery site with isPrime flag set

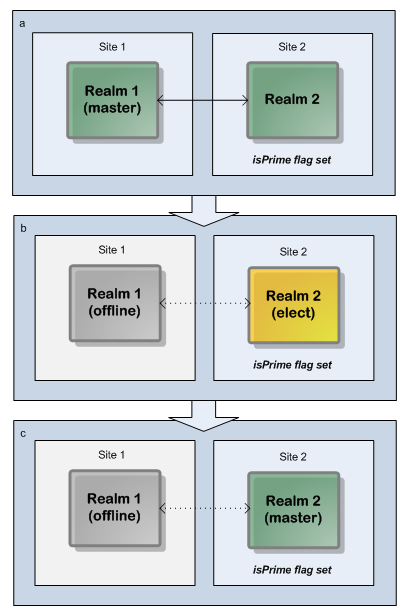

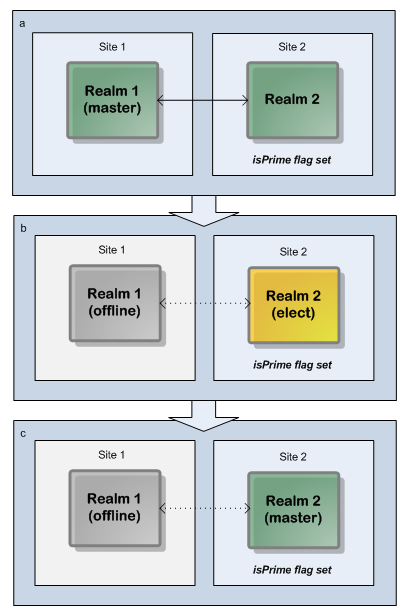

In a disaster recovery situation, where the production site is lost, the DR site will achieve quorum with only one of the two nodes available because the isPrime flag provides an additional vote for the DR site:

Two-realm cluster over two sites: Sites make a 51% quorum achievable if one location/realm fails.

Note: Dotted lines represent interrupted communication owing to server or network outages.
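The effect of the additional vote can be modelled with a small Python sketch. It does not use the Universal Messaging Admin API: the `Site` class and `cluster_has_quorum` function are hypothetical stand-ins, and the voting rule is an assumption made for illustration (the prime site's extra vote counts only while all of its members are reachable, and that vote is also added to the cluster total). The product's actual rule may differ in detail.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """Hypothetical stand-in for a cluster Site object (not the Admin API class)."""
    name: str
    members: list = field(default_factory=list)  # realm names located at this site
    is_prime: bool = False  # whether the site may cast one additional vote


def cluster_has_quorum(sites, reachable):
    """Decide quorum from the set of reachable realm names.

    Assumption: a prime site adds one vote to the cluster total, and casts
    that vote only while every one of its members is reachable.
    """
    total_votes = sum(len(s.members) for s in sites)
    votes = sum(1 for s in sites for m in s.members if m in reachable)
    for s in sites:
        if s.is_prime:
            total_votes += 1
            if s.members and all(m in reachable for m in s.members):
                votes += 1
    return 2 * votes > total_votes


production = Site("production", ["realm1"])
dr = Site("dr", ["realm2"], is_prime=True)
sites = [production, dr]

print(cluster_has_quorum(sites, {"realm2"}))  # production site lost: True
print(cluster_has_quorum(sites, {"realm1"}))  # DR site lost: False
```

In this model the surviving DR realm holds two of three votes, while the production realm on its own holds only one of three.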

If, on the other hand, the disaster recovery site (which has the isPrime flag set) is lost, then manual intervention would be required to set the isPrime flag on the production site instead, so that the production site alone can achieve quorum and the cluster can still operate.

Note that this example uses only one realm per site for simplicity. The same technique can be used for sites with as many realms as required.
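As an illustration of the manual intervention with more than one realm per site, the following Python sketch moves the prime flag from a lost DR site to the production site. The names `set_prime` and `site_quorum` and the dictionary layout are hypothetical and are not Admin API calls. The voting rule is a modelling assumption: the prime site adds one vote to the cluster total and casts it only while all of its members are reachable, and exactly one site is prime at a time.

```python
def set_prime(sites, prime_name):
    """Return a copy of the site map with the prime flag on exactly one site."""
    if prime_name not in sites:
        raise KeyError(prime_name)
    return {
        name: {"members": list(site["members"]), "is_prime": name == prime_name}
        for name, site in sites.items()
    }


def site_quorum(sites, reachable):
    """Strict-majority check that includes the prime site's extra vote (assumed rule)."""
    total = votes = 0
    for site in sites.values():
        up = [m for m in site["members"] if m in reachable]
        total += len(site["members"])
        votes += len(up)
        if site["is_prime"]:
            total += 1  # the extra vote enlarges the total
            if site["members"] and len(up) == len(site["members"]):
                votes += 1  # cast only if the whole prime site is reachable
    return 2 * votes > total


sites = {
    "production": {"members": ["prod1", "prod2"], "is_prime": False},
    "dr": {"members": ["dr1", "dr2"], "is_prime": True},
}
survivors = {"prod1", "prod2"}  # the DR site has been lost

print(site_quorum(sites, survivors))  # False: 2 of 5 votes
sites = set_prime(sites, "production")
print(site_quorum(sites, survivors))  # True: 3 of 5 votes
```

Returning a new site map rather than mutating the old one keeps the before and after configurations available for comparison while the cause of the outage is investigated.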

Setting the isPrime flag can be achieved programmatically using the Universal Messaging Admin API or using the Enterprise Manager tool. In these situations it is always advisable to discover the cause of the outage so any changes to configuration are made with the relevant facts at hand.
