Out-of-Memory Protection

Universal Messaging provides methods that you can use to protect against out-of-memory situations. Some of these methods may impact performance; those that do are disabled by default.

Metric Management

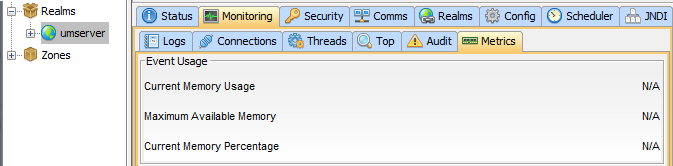

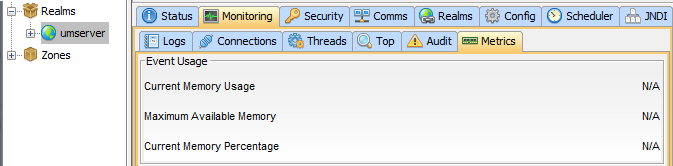

Universal Messaging provides metrics pertaining to memory usage on a realm server. When you select the Monitoring tab in the Enterprise Manager, these metrics can be found under the Metrics tab, as shown below:

Metrics can be enabled or disabled on a realm server through the realm configuration. The configuration option EnableMetrics enables or disables all metrics at once; each metric can also be enabled or disabled individually.

Event Usage

The event usage metric provides information on memory currently in use by on-heap events. The three statistics provided are as follows:

1. Current Memory Usage - the memory currently in use by on-heap events.

2. Maximum Available Memory - the maximum memory currently available to the JVM.

3. Current Memory Percentage - the percentage of the maximum memory available to the JVM currently occupied by on-heap events.
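The relationship between the three statistics can be sketched in a few lines of Java. This is an illustration only and not Universal Messaging code: the class name, the helper method, and the 64 MB figure for event memory are assumptions made for the example. The only real API used is the standard `Runtime.getRuntime().maxMemory()` call.

```java
/** Illustrative only: mirrors the three event usage statistics; not Universal Messaging code. */
final class EventUsageSketch {

    /** Percentage of maxBytes occupied by usedBytes (hypothetical helper). */
    static double memoryPercentage(long usedBytes, long maxBytes) {
        if (usedBytes < 0 || maxBytes <= 0) {
            throw new IllegalArgumentException("usedBytes must be >= 0 and maxBytes must be > 0");
        }
        return 100.0 * usedBytes / maxBytes;
    }

    public static void main(String[] args) {
        // Maximum memory the JVM will attempt to use (standard java.lang.Runtime call).
        long maxBytes = Runtime.getRuntime().maxMemory();

        // Assumed figure for memory held by on-heap events; the realm server tracks the real value.
        long eventBytes = 64L * 1024 * 1024;

        System.out.printf("Current Memory Usage: %d bytes%n", eventBytes);
        System.out.printf("Maximum Available Memory: %d bytes%n", maxBytes);
        System.out.printf("Current Memory Percentage: %.2f%%%n",
                memoryPercentage(eventBytes, maxBytes));
    }
}
```

For example, 512 bytes of event data against a 2048-byte maximum gives a Current Memory Percentage of 25.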

Event usage monitoring can be enabled or disabled through the configuration option EnableEventMemoryMonitoring. Enabling this metric may impact performance, so it is disabled by default. When the metric is disabled, "N/A" is displayed for each of the three statistics described previously, as shown in the screenshot above.

Flow Control

Universal Messaging provides the ability to hold producing connections (those performing publishes, transactional publishes, batch publishes, and so on) before processing their events. This allows consumers to reduce the number of events on connections before additional events are published, thus decreasing memory usage.

Flow control can be enabled or disabled and configured on a realm server through the realm configuration. The configuration option EnableFlowControl enables or disables flow control; flow control is disabled by default. Three further options set the wait time for each level of throttling:

FlowControlWaitTimeThree - the first level of waiting, and shortest wait time; activated at 70% memory usage

FlowControlWaitTimeTwo - the second level of waiting; activated at 80% memory usage

FlowControlWaitTimeOne - the final level of waiting, and longest wait time; activated at 90% memory usage

Each of these options can be configured to suit the level of out-of-memory protection required. They represent the amount of time, in milliseconds, for which producer connections are throttled upon reaching the associated percentage of memory usage. In general, as memory usage gets closer to 100%, the need for throttling will be greater, so you would normally set FlowControlWaitTimeOne to a higher value than FlowControlWaitTimeTwo, which in turn will be higher than FlowControlWaitTimeThree.

Example: Assume a current memory usage of 90%. If there is a new publish request, the connection from which the publish originates will be throttled for FlowControlWaitTimeOne milliseconds, ensuring the event(s) will not be published sooner than FlowControlWaitTimeOne milliseconds from the time of publish.
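The tiered behavior described above can be sketched as a simple threshold lookup. This is a minimal illustration, not the server's implementation: the class and method names are hypothetical, the wait times (100, 500 and 2000 milliseconds) are assumed values rather than product defaults, and treating each threshold as inclusive is an assumption based on the 90% example.

```java
/** Illustrative only: maps memory usage to a flow control wait time; not Universal Messaging code. */
final class FlowControlSketch {

    /**
     * Returns how long, in milliseconds, a producing connection would be held
     * at the given memory usage percentage (hypothetical helper).
     */
    static long waitMillisFor(double memoryPercent, long waitThree, long waitTwo, long waitOne) {
        if (memoryPercent >= 90.0) {
            return waitOne;   // final level, longest wait (FlowControlWaitTimeOne)
        }
        if (memoryPercent >= 80.0) {
            return waitTwo;   // second level (FlowControlWaitTimeTwo)
        }
        if (memoryPercent >= 70.0) {
            return waitThree; // first level, shortest wait (FlowControlWaitTimeThree)
        }
        return 0L;            // below 70%: no throttling
    }

    public static void main(String[] args) {
        // Assumed wait times for illustration; choose values that suit your environment.
        long waitThree = 100L;
        long waitTwo = 500L;
        long waitOne = 2000L;

        for (double pct : new double[] {65.0, 75.0, 85.0, 90.0}) {
            System.out.printf("%.0f%% memory usage -> hold for %d ms%n",
                    pct, waitMillisFor(pct, waitThree, waitTwo, waitOne));
        }
    }
}
```

With these assumed values, a publish arriving at 65% usage is not held, while publishes at 75%, 85% and 90% are held for 100, 500 and 2000 milliseconds respectively.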

Transactional Publishes

When using transactional publishes, the default timeouts are shorter than those for standard publishes. Because flow control can hold a connection for longer than these timeouts, a client may time out while its events are still waiting to be processed. Even if the client times out, the event may still be processed if the session has not been disconnected by the keep alive. As such, when using transactional publishes, it is best practice to call publish just once before committing. Each successive publish is throttled, which can lead to a timeout during the commit; despite this timeout, the commit may still succeed.
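To see why a single publish per transaction is recommended, consider a simplified model in which every publish call in a transaction is held for the same wait time before the commit can complete. The sketch below is an assumption-laden illustration, not a description of the server's internals: the class, the method names, the linear accumulation of delays, and the 8000 millisecond client timeout are all hypothetical.

```java
/** Illustrative only: simplified model of throttling delay in a transaction; not Universal Messaging code. */
final class TransactionalThrottleSketch {

    /** Total time the transaction's publishes are held, assuming delays simply add up. */
    static long totalHoldMillis(int publishCalls, long waitMillis) {
        if (publishCalls < 0 || waitMillis < 0) {
            throw new IllegalArgumentException("arguments must not be negative");
        }
        return publishCalls * waitMillis;
    }

    /** True if the accumulated hold time reaches the (assumed) client-side timeout. */
    static boolean mayTimeOut(int publishCalls, long waitMillis, long clientTimeoutMillis) {
        return totalHoldMillis(publishCalls, waitMillis) >= clientTimeoutMillis;
    }

    public static void main(String[] args) {
        long waitOne = 2000L;        // assumed FlowControlWaitTimeOne value
        long clientTimeout = 8000L;  // assumed client timeout, not a product default

        System.out.println("1 publish may time out: " + mayTimeOut(1, waitOne, clientTimeout));
        System.out.println("5 publishes may time out: " + mayTimeOut(5, waitOne, clientTimeout));
    }
}
```

Under these assumptions, one publish is held for 2000 milliseconds and stays within the timeout, whereas five publishes accumulate 10000 milliseconds of hold time and exceed it, even though the commit may still succeed on the server.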