This document covers the following topics:

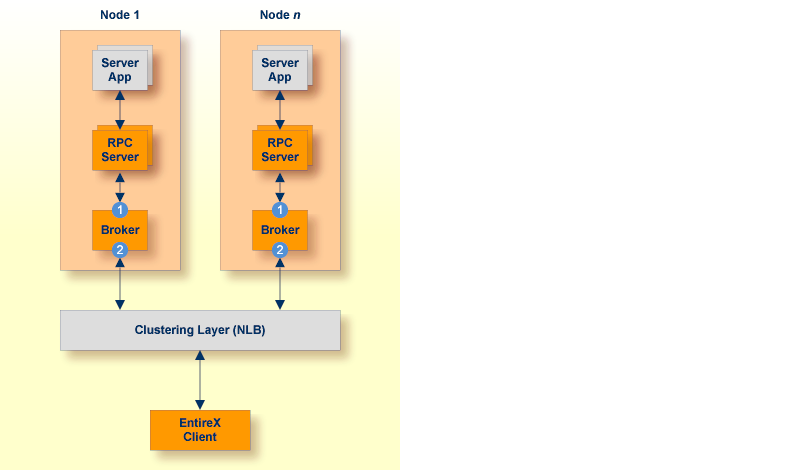

Segmenting dynamic workload from static server and management topology is critically important. Using broker TCP/IP-specific attributes, define two separate connection points:

One for RPC server-to-broker and admin connections.

The second for client workload connections.

See TCP/IP-specific Attributes. Sample attribute file settings:

| Sample Attribute File Settings | Note |
|---|---|
| PORT=1972 | In this example, the HOST is not defined, so the default setting will be used (localhost). |
| HOST=10.20.74.103 (or DNS) PORT=1811 | In this example, the HOST stack is the virtual IP address. The PORT will be shared by other brokers in the cluster. |

We recommend the following:

Share configurations: consolidate as many configuration parameters as possible in the attribute settings. Keep a separate attribute file for each broker, but keep the files as similar as possible.

Isolate workload listeners from management listeners.

In addition to broker redundancy, you also need to configure your RPC servers for redundant operations. We recommend the following best practices when setting up your RPC servers:

Make sure your definitions for CLASS/SERVER/SERVICE are identical across the clustered brokers. Using identical service names will allow the broker to round-robin messages to each of the connected RPC server instances.

For troubleshooting purposes, and if your site allows this, you can optionally use a different user ID for each RPC server.

RPC servers are typically monitored using Command Central as services of a broker.

Establish the broker connection using the static Broker name:port definition.

Maintain separate parameter files for each Natural RPC Server instance.

Monitor brokers through Command Central.

An important aspect of high availability is keeping the system available during planned maintenance events such as lifecycle management, applying software fixes, or modifying the number of runtime instances in the cluster. Using a virtual IP networking approach for broker clustering keeps the overall system available while these tasks are carried out.

Broker administrators, notably on UNIX and Windows systems, need to start, ping (to check that the broker is alive) and stop brokers and RPC servers from a system command line or prompt, or from within batch or shell scripts. To control and manage the lifecycle of brokers, the following commands are available with Command Central:

sagcc exec lifecycle start local EntireXCore-EntireX-Broker-<broker-id>

sagcc get monitoring state local EntireXCore-EntireX-Broker-<broker-id>

sagcc exec lifecycle stop local EntireXCore-EntireX-Broker-<broker-id>

sagcc exec lifecycle restart local EntireXCore-EntireX-Broker-<broker-id>
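The lifecycle commands above lend themselves to scripting, for example to restart clustered brokers one at a time during planned maintenance. The following is a minimal sketch, not a supported tool: the function names (`broker_cmd`, `run_broker`, `rolling_restart`) and the `DRY_RUN` variable are hypothetical, and only the four `sagcc` invocations listed above are used.

```shell
#!/usr/bin/env bash
# Sketch only: helper names and DRY_RUN are illustrative assumptions.

# Build the Command Central command line for one broker and one action.
broker_cmd() {
  local action="$1" broker_id="$2"
  local component="EntireXCore-EntireX-Broker-${broker_id}"
  case "$action" in
    start|stop|restart)
      echo "sagcc exec lifecycle ${action} local ${component}" ;;
    state)
      echo "sagcc get monitoring state local ${component}" ;;
    *)
      echo "unknown action: ${action}" >&2
      return 1 ;;
  esac
}

# Run the command, or only print it when DRY_RUN=1.
run_broker() {
  local cmd
  cmd="$(broker_cmd "$1" "$2")" || return 1
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$cmd"
  else
    $cmd
  fi
}

# Restart brokers one after another, querying the state after each restart,
# so that the other cluster members can keep serving in the meantime.
rolling_restart() {
  local id
  for id in "$@"; do
    run_broker restart "$id" || return 1
    run_broker state "$id" || return 1
  done
}
```

Calling `DRY_RUN=1 rolling_restart ETB001 ETB002` (the broker IDs are placeholders) prints the commands without executing them, which is a cautious way to review the sequence before running it against a live cluster.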

To start an RPC server


See Starting the RPC Server for C | .NET | Java | XML/SOAP | IMS Connect | CICS ECI | AS/400 | IBM® MQ.

To ping an RPC server


Use the following Information Service command:

etbinfo -b <broker-id> -d SERVICE -c <class> -n <server name> -s <service> --pingrpc
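In a batch or shell script, the ping is often wrapped in a short retry loop so that a server that is still starting up is not reported as failed immediately. The sketch below makes two assumptions that should be verified in your environment: that `etbinfo` returns a non-zero exit status when the ping fails, and that retrying is appropriate for your setup. The function name `ping_rpc_server` and the `PING_CMD` override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only. Assumption: etbinfo exits non-zero when the ping fails.
# PING_CMD is a hypothetical override for exercising the loop without a broker.

ping_rpc_server() {
  local broker_id="$1" class="$2" server="$3" service="$4"
  local attempts="${5:-3}" delay="${6:-5}"   # retry count and pause in seconds
  local ping_cmd="${PING_CMD:-etbinfo}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # Same Information Service command line as shown above.
    if "$ping_cmd" -b "$broker_id" -d SERVICE -c "$class" -n "$server" -s "$service" --pingrpc; then
      return 0
    fi
    echo "ping attempt ${i}/${attempts} failed" >&2
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

A call such as `ping_rpc_server <broker-id> <class> <server name> <service> 5 10` would try up to five times with ten seconds between attempts; the last two arguments are optional.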

To stop an RPC server
