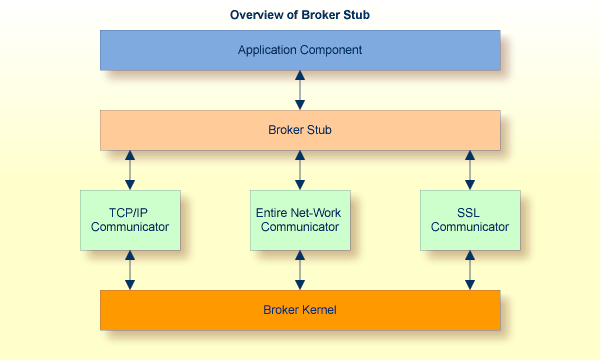

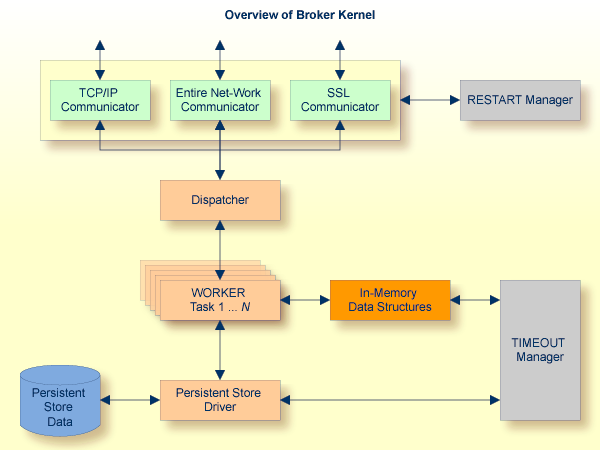

This section describes the command process flows within the Broker kernel and stubs when two application components communicate with each other using EntireX Broker. The Broker consists of the following components:

- a stub (application binding), which resides within the process space of each application component
- a Broker kernel, which resides in a separate process space, managing all the communication between application components

The details of the transport protocols remain transparent to the application components because they reside within EntireX Broker (stubs and kernel). The EntireX Broker kernel and the location of the transport protocols are the architectural aspects of EntireX Broker that distinguish it from other messaging middleware.

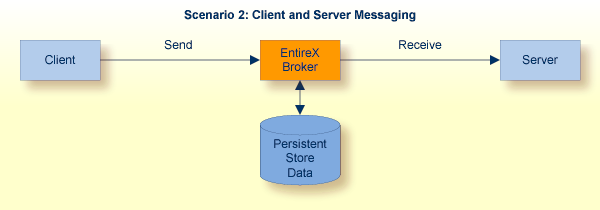

EntireX Broker uses the client-and-server communication model. See Writing Client and Server Applications for details.

This is a synchronous messaging scenario: send request and wait for a response.

This is an asynchronous messaging scenario: put message in service queue.
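The difference between the two scenarios above can be illustrated with a small, self-contained Python sketch. It does not use the EntireX ACI: the `ToyBroker` class and its `send_and_wait`, `put` and `drain` methods are hypothetical names invented for this illustration, and the "server" is just a local function.

```python
from collections import deque


class ToyBroker:
    """Hypothetical in-memory stand-in for a broker; not the EntireX API."""

    def __init__(self, handler):
        self.handler = handler          # server-side function that answers a request
        self.service_queue = deque()    # messages waiting for the server

    def send_and_wait(self, message):
        # Synchronous: the caller gets control back only together with the reply.
        return self.handler(message)

    def put(self, message):
        # Asynchronous: the message is queued and the caller continues at once.
        self.service_queue.append(message)

    def drain(self):
        # The server processes the queued messages later, at its own pace.
        replies = []
        while self.service_queue:
            replies.append(self.handler(self.service_queue.popleft()))
        return replies


broker = ToyBroker(handler=str.upper)

sync_reply = broker.send_and_wait("ping")   # returns only once the reply exists

broker.put("first")                         # returns immediately, no reply yet
broker.put("second")
queued_before = len(broker.service_queue)
async_replies = broker.drain()
```

In the synchronous call the reply is the return value; in the asynchronous case the sender only learns that the message was queued, and the replies become available when the server side gets around to the queue.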

Note:

Client and server have specific meanings within the context of EntireX.

| Term | Description |
|---|---|
| Client | An application component intending to access a service makes its request via EntireX Broker which routes the request to the specific application component offering this service. The request can be a single pair of messages comprising request/reply; or it can be a sequence of multiple, related messages containing one or more requests and one or more replies, known as a conversation. This enables EntireX Broker to be used for applications supporting different programming interfaces. It also allows interoperability between types of application components employing these different interfaces. |
| Server | An application component offering a service registers it with EntireX Broker. EntireX Broker makes the registered service available to other application components capable of communicating with EntireX Broker. The fact that a server has been registered and is available in this way defines it as a service in terms of class/name/server within the context of EntireX. |
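The relationship between the two roles can be sketched as a lookup table: a server registers a handler under a class/name/server key, and a client addresses that key rather than the server process itself. The following Python sketch is an illustration under that assumption only. `ServiceRegistry`, `register` and `request` are hypothetical names, not part of the EntireX API, and the key values are made up.

```python
class ServiceRegistry:
    """Hypothetical registry keyed by class/name/server; illustration only."""

    def __init__(self):
        self._services = {}

    def register(self, service_class, name, server, handler):
        # A server offers a service by registering it with the broker.
        self._services[(service_class, name, server)] = handler

    def request(self, service_class, name, server, message):
        # A client names the service; the broker routes to whoever registered it.
        key = (service_class, name, server)
        if key not in self._services:
            raise LookupError(f"service not registered: {'/'.join(key)}")
        return self._services[key](message)


registry = ServiceRegistry()
registry.register("ACLASS", "ANAME", "ASERVER", lambda msg: f"echo:{msg}")

# A single request/reply pair; a conversation would be several such
# related exchanges with the same service.
reply = registry.request("ACLASS", "ANAME", "ASERVER", "hello")
```

Because the client only knows the key, the component behind it can be written against a different programming interface without the client noticing.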

The type of communication model described in this section and in the section Architecture of Broker Kernel is client and server.

The EntireX Broker stub is another name for Software AG's ACI (Advanced Communication Interface). The stub implements an API (application programming interface) that allows programs written in various languages to access EntireX Broker.

See also Administering Broker Stubs under z/OS | UNIX | Windows | BS2000 | z/VSE | IBM i.

The following table gives a step-by-step description of a typical command process flow from and to a Broker stub. This example describes a SEND/RECEIVE command pair.

| Step | Description |
|---|---|
| 1 | The originating application program calls the stub with a SEND/WAIT=YES command. The stub builds the necessary information structures and communicates the message to the Broker kernel. Basic validation is performed in the stub before the command is passed to the Broker kernel. |
| 2 | The stub uses one of the following transport mechanisms to transmit the command to the Broker kernel: TCP, SSL or Entire Net-Work. The application does not have to recognize the details of the transport protocol since all transport protocol processing resides entirely within the stub. |
| 3 | The application is suspended while the stub waits for a response. Since the application has issued SEND, WAIT=YES it must wait for the message to travel via the Broker kernel to the partner application which will satisfy the request. |
| 4 | After the request has been satisfied and the message returns from the partner application, via the Broker kernel, the stub will pass control back to the originating application. |
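The four steps above can be mimicked with a short Python sketch. It is a simulation, not the real stub: `ToyStub`, its `send` method and the `kernel_and_partner` function are hypothetical, an in-process queue stands in for the TCP, SSL or Entire Net-Work transport, and the "partner application" simply reverses the message text.

```python
import queue
import threading


class ToyStub:
    """Hypothetical stub: validates, transmits, then blocks for the reply."""

    def __init__(self, to_kernel):
        self.to_kernel = to_kernel      # stands in for TCP, SSL or Entire Net-Work

    def send(self, message, wait=True, timeout=5.0):
        # Step 1: basic validation happens in the stub, before any transmission.
        if not isinstance(message, str) or not message:
            raise ValueError("message must be a non-empty string")
        reply_box = queue.Queue(maxsize=1)
        # Step 2: transmit; the caller never sees the transport details.
        self.to_kernel.put((message, reply_box))
        if not wait:
            return None
        # Step 3: the caller is suspended here until the reply arrives.
        # Step 4: control returns to the caller together with the reply.
        return reply_box.get(timeout=timeout)


def kernel_and_partner(inbox):
    # Stand-in for the kernel plus the partner application.
    while True:
        item = inbox.get()
        if item is None:                # sentinel: shut down
            break
        message, reply_box = item
        reply_box.put(message[::-1])    # the "service" reverses the text


channel = queue.Queue()
worker = threading.Thread(target=kernel_and_partner, args=(channel,), daemon=True)
worker.start()

stub = ToyStub(channel)
reply = stub.send("request", wait=True)   # blocks like SEND with WAIT=YES

channel.put(None)                         # stop the stand-in kernel thread
worker.join()
```

The calling code contains no transport logic at all; swapping the queue for a socket would change only the inside of `ToyStub`.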

The type of communication model described in this section and in the section Architecture of Broker Stub is client and server.

The following table gives a step-by-step description of a typical command process flow within the Broker kernel. This example describes a SEND/RECEIVE command pair.

| Step | Description |
|---|---|
| 1 | The originating application program calls the Broker stub with a SEND command. The stub builds the necessary information structures and transmits the message to the Broker kernel using TCP, SSL or Entire Net-Work. |
| 2 | The message is received by one of the communications subtasks running within the Broker kernel. The communications subtask passes the message to the dispatcher. |
| 3 | The dispatcher schedules the processing of the message within a worker task inside the Broker kernel. |
| 4 | The worker task processes the inbound message, performing any necessary character conversion and security operations, and then determines the partner to which the message is to be routed. Any necessary persistence operations are performed under control of the worker task. |
| 5 | The outbound message is passed to the relevant communications subtask within the Broker kernel for transmission to the partner application component. |
| 6 | The partner application component, which has issued a RECEIVE command via the Broker stub, obtains the message from the originating application program. |
| 7 | The partner application component then processes the message and normally makes a reply. |
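The hand-offs in steps 2 to 6 can be sketched as a pipeline of threads connected by queues. This is a toy model under several assumptions: the function names, the message layout (`user`, `service`, `payload`), the allow-list used as a security check and the Latin-1 decoding used as character conversion are all invented for illustration, and persistence is not modelled.

```python
import queue
import threading

STOP = object()   # sentinel that shuts each stage down in turn


def communications_subtask(network_in, dispatch_q):
    # Step 2: receive the message and pass it to the dispatcher.
    for msg in iter(network_in.get, STOP):
        dispatch_q.put(msg)
    dispatch_q.put(STOP)


def dispatcher(dispatch_q, worker_q):
    # Step 3: schedule the message for processing by a worker task.
    for msg in iter(dispatch_q.get, STOP):
        worker_q.put(msg)
    worker_q.put(STOP)


def worker_task(worker_q, partner_queues, allowed_users, partner_for):
    # Step 4: convert, check and route each inbound message.
    for msg in iter(worker_q.get, STOP):
        text = msg["payload"].decode("latin-1")   # stand-in for character conversion
        if msg["user"] not in allowed_users:      # stand-in for a security check
            continue
        # Step 5: hand the outbound message over for delivery to the partner.
        partner_queues[partner_for[msg["service"]]].put(text)


network_in, dispatch_q, worker_q = queue.Queue(), queue.Queue(), queue.Queue()
partner_queues = {"server-1": queue.Queue()}
threads = [
    threading.Thread(target=communications_subtask, args=(network_in, dispatch_q)),
    threading.Thread(target=dispatcher, args=(dispatch_q, worker_q)),
    threading.Thread(
        target=worker_task,
        args=(worker_q, partner_queues, {"alice"}, {"orders": "server-1"}),
    ),
]
for t in threads:
    t.start()

network_in.put({"user": "alice", "service": "orders", "payload": b"caf\xe9"})
network_in.put({"user": "mallory", "service": "orders", "payload": b"denied"})
network_in.put(STOP)
for t in threads:
    t.join()

# Step 6: the partner's RECEIVE obtains the routed message.
received = partner_queues["server-1"].get_nowait()
```

Only the first message reaches the partner queue; the second is dropped by the stand-in security check before routing.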

Notes: