Rate limit your APIs

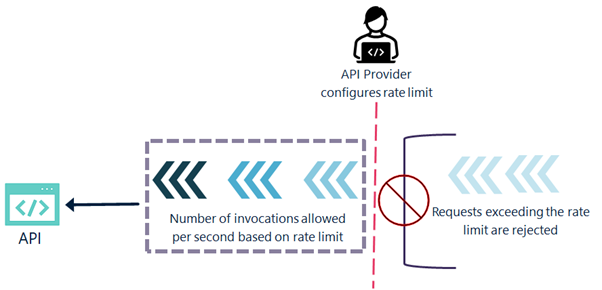

API rate limiting is a technique that limits the number of invocations made to an API during a specified time interval. Limiting the number of invocations prevents the API from being overloaded and, in turn, helps it maintain its performance.

Using the Traffic Optimization policy in API Gateway, you can limit the number of API invocations during a specified time interval. When the number of invocations exceeds the configured limit, API Gateway sends alerts to a specified destination.

Rate limiting algorithm in API Gateway

API Gateway uses the Token Bucket algorithm to control the flow of requests. It maintains a bucket of tokens for each API and rate limit policy definition. The bucket holds tokens up to a configured threshold, which determines the maximum number of requests allowed within a given time frame. Each incoming request consumes one token from the bucket. If a token is available, the request is processed; if the bucket is empty, the request is rejected.

The time interval for token replenishment starts when the API becomes active. For example, if the API allows 10,000 requests per hour and becomes active at 4:30 PM, the first interval lasts until 5:30 PM. At the end of each interval, API Gateway refills the bucket with the configured number of tokens, and a new interval begins.
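The replenishment behavior can be sketched in Python. This is a minimal model under stated assumptions, not API Gateway's implementation: the class `IntervalTokenBucket` and its fields `capacity`, `interval_seconds`, and `start` are hypothetical names, time is passed in explicitly as seconds since midnight, and the bucket is refilled to full capacity at each interval boundary (as the text describes) rather than continuously.

```python
from dataclasses import dataclass, field


@dataclass
class IntervalTokenBucket:
    """Token bucket refilled to full capacity at fixed interval boundaries.

    Hypothetical sketch: the class and field names are illustrative and are
    not API Gateway configuration settings.
    """

    capacity: int          # maximum number of requests allowed per interval
    interval_seconds: int  # length of one replenishment interval
    start: int             # time (seconds) when the API became active
    tokens: int = field(init=False)
    interval_end: int = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity
        self.interval_end = self.start + self.interval_seconds

    def try_acquire(self, now: float) -> bool:
        """Consume one token for a request at time `now`; False means reject."""
        if now >= self.interval_end:
            # Advance past every interval that has fully elapsed,
            # then refill the bucket for the interval containing `now`.
            elapsed = int((now - self.interval_end) // self.interval_seconds) + 1
            self.interval_end += elapsed * self.interval_seconds
            self.tokens = self.capacity
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False


# 10,000 requests per hour; the API becomes active at 4:30 PM.
START = 16 * 3600 + 30 * 60
bucket = IntervalTokenBucket(capacity=10_000, interval_seconds=3600, start=START)

# 10,001 requests arrive at 4:40 PM: only the first 10,000 are accepted.
accepted = sum(bucket.try_acquire(START + 600) for _ in range(10_001))
print(accepted)  # 10000

# At 5:30 PM a new interval begins and the bucket is full again.
print(bucket.try_acquire(START + 3600))  # True
```

Passing the time in explicitly, instead of reading the system clock, keeps the sketch deterministic and easy to test.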

Clustered environment and token buckets

In a clustered environment, each node keeps its own local supply of tokens, and token consumption across the nodes must be synchronized with a central bucket. To reduce how often nodes synchronize, API Gateway uses prefetched tokens: each node fetches a batch of tokens from the central bucket in advance and serves requests from that local supply. This lets each cluster node handle requests more smoothly and improves overall performance.

For example, in a cluster configured with three nodes and a prefetch setting of 10 tokens, each node can fetch and store 10 tokens locally. When a request arrives, it consumes a token from the local prefetch cache. If the local cache is depleted, the node requests more tokens from the central bucket.

Prefetching tokens reduces latency because nodes contact the central bucket less often. However, it can waste tokens: if a node fetches tokens but does not receive enough requests to use them, those tokens remain in that node's local cache, where other nodes cannot use them.
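The prefetching scheme and its trade-off can be illustrated with a small single-process simulation. It is a hypothetical model, not API Gateway's implementation: `CentralBucket`, `Node`, and the `prefetch` parameter are illustrative names, and real cluster nodes would synchronize over the network rather than share an in-memory object.

```python
class CentralBucket:
    """Cluster-wide token pool (hypothetical, in-memory stand-in)."""

    def __init__(self, tokens: int) -> None:
        self.tokens = tokens

    def fetch(self, count: int) -> int:
        """Hand out up to `count` tokens and return how many were granted."""
        granted = min(count, self.tokens)
        self.tokens -= granted
        return granted


class Node:
    """Cluster node that serves requests from a local prefetch cache."""

    def __init__(self, central: CentralBucket, prefetch: int) -> None:
        self.central = central
        self.prefetch = prefetch
        self.local_tokens = 0

    def handle_request(self) -> bool:
        # Contact the central bucket only when the local cache is empty.
        if self.local_tokens == 0:
            self.local_tokens = self.central.fetch(self.prefetch)
        if self.local_tokens > 0:
            self.local_tokens -= 1
            return True
        return False  # nothing cached locally and nothing left centrally


# Three nodes, a prefetch setting of 10, and 30 tokens in the central bucket.
central = CentralBucket(tokens=30)
node1, node2, node3 = (Node(central, prefetch=10) for _ in range(3))

# One request per node: each node prefetches 10 tokens, draining the central bucket.
for node in (node1, node2, node3):
    node.handle_request()

# A burst of 15 requests then hits node1.
results = [node1.handle_request() for _ in range(15)]
print(results.count(True), results.count(False))  # 9 6
print(node2.local_tokens + node3.local_tokens)    # 18
```

In this run node1 rejects 6 requests even though node2 and node3 still hold 18 unused tokens between them, which is the wastage described above. In exchange, node1 serves its first 9 burst requests without contacting the central bucket at all.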

The Traffic Optimization policy generates two types of events when the specified limit is breached:

Policy violation event. Generated for each violation that occurs for an API. For example, 100 violations generate 100 policy violation events.

Monitor event. Generated according to the alert frequency configured in the policy.
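The difference between the two event types can be modeled as follows. This is an assumed model, not the policy's actual configuration: the class `BreachEvents`, the `alert_interval` parameter, and the API name `orders-api` are hypothetical, and `alert_interval` stands in for the alert frequency setting by allowing at most one monitor event per interval.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class BreachEvents:
    """Hypothetical model of the events raised when a rate limit is breached.

    `alert_interval` is an illustrative stand-in for the policy's alert
    frequency: at most one monitor event per `alert_interval` seconds.
    """

    alert_interval: float
    violation_events: List[Tuple[str, float]] = field(default_factory=list)
    monitor_events: List[Tuple[str, float]] = field(default_factory=list)
    last_alert: Optional[float] = None

    def on_violation(self, api: str, now: float) -> None:
        # Every violation produces its own policy violation event.
        self.violation_events.append((api, now))
        # Monitor events are throttled by the (assumed) alert interval.
        if self.last_alert is None or now - self.last_alert >= self.alert_interval:
            self.monitor_events.append((api, now))
            self.last_alert = now


# 100 violations, one per second, with an assumed alert interval of 60 seconds.
events = BreachEvents(alert_interval=60)
for second in range(100):
    events.on_violation("orders-api", float(second))

print(len(events.violation_events))  # 100
print(len(events.monitor_events))    # 2 (at 0 s and 60 s)
```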

The following illustration shows how the configured rate limit restricts API invocations.

Why and when do you configure rate limiting?

API providers configure rate limits to:

Prevent resource abuse. A single consumer might invoke an API an unexpectedly large number of times, which overloads the system and degrades the API's performance. As an API provider, you can configure a rate limit to prevent such usage.

Manage traffic. As an API provider, you offer certain SLAs to your consumers, and if your APIs have a large consumer base, it is vital to control the rate of traffic to them. Rate limiting lets you manage traffic to an API so that it stays highly available and responsive when handling requests from many consumers.

Control resource usage. Resources cost money. The more often an API is invoked, the more it consumes resources such as hosting capacity, third-party agents, and so on. By setting rate limits, you can control how much of these resources each client uses.

Protect against malicious activities. Restricting the number of requests over a period also reduces the risk from attackers and helps protect your resources from malicious activities.

Rate limiting considerations

You can configure a rate limit in conjunction with other API policies, such as the Identify & Authorize policy, the Traffic management policy, and so on.

To use a rate limit for protection and a quota for monetization correctly, you must understand the fundamental difference between the two:

| Rate limit | Quota |
|---|---|
| Specifies the number of requests that can be made to an API over a relatively short period, such as a second or a minute. | Specifies the number of requests that a consumer can make to an API over a longer period, such as a day, a week, or a month. |
| Useful for managing traffic and preventing overload of an API in real time. | Useful for controlling usage over a longer period and ensuring fair use of API resources. |
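The distinction can be sketched by checking both limits on every request: a short-window rate limit on the API as a whole and a long-window quota per consumer. The class `ApiLimits`, its parameters, the consumer names, and the fixed one-second and one-day windows are assumptions for illustration; they are not API Gateway settings.

```python
from typing import Dict, Tuple

SECONDS_PER_DAY = 86_400


class ApiLimits:
    """Hypothetical sketch combining a rate limit and a per-consumer quota."""

    def __init__(self, rate_per_second: int, quota_per_day: int) -> None:
        self.rate_per_second = rate_per_second  # protection: applies to the whole API
        self.quota_per_day = quota_per_day      # monetization: applies per consumer
        self._api_window: Tuple[int, int] = (-1, 0)   # (second, requests in it)
        self._usage: Dict[str, Tuple[int, int]] = {}  # consumer -> (day, requests)

    def allow(self, consumer: str, now: float) -> bool:
        second, day = int(now), int(now // SECONDS_PER_DAY)

        window, api_count = self._api_window
        if window != second:
            api_count = 0  # a new one-second window has started
        usage_day, used = self._usage.get(consumer, (day, 0))
        if usage_day != day:
            used = 0  # a new day has started for this consumer

        if api_count >= self.rate_per_second:
            return False  # rate limit: too many requests to the API this second
        if used >= self.quota_per_day:
            return False  # quota: consumer has used up today's allowance

        self._api_window = (second, api_count + 1)
        self._usage[consumer] = (day, used + 1)
        return True


limits = ApiLimits(rate_per_second=2, quota_per_day=3)
requests = [("alice", 0), ("bob", 0), ("alice", 0.5), ("alice", 1),
            ("alice", 2), ("alice", 3), ("bob", 3), ("alice", SECONDS_PER_DAY)]
print([limits.allow(consumer, t) for consumer, t in requests])
# [True, True, False, True, True, False, True, True]
```

The third request is rejected by the rate limit even though alice still has quota left, while the sixth is rejected by the quota even though the API has spare capacity in that second. Rejected requests count against neither limit in this sketch; whether a real gateway counts them is a separate design choice.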