How Much Will an Application Speed up with Caching?

In I/O-bound applications, which includes most business applications, most of the response time is spent getting data from a database. In a system where each piece of data is used only once, caching provides no benefit. In a system where a high proportion of the data is reused, the speedup is significant.

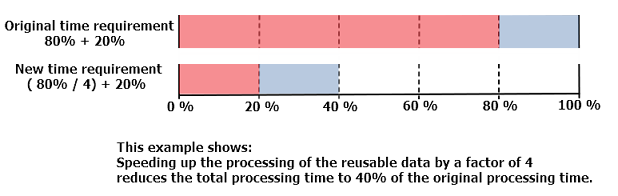

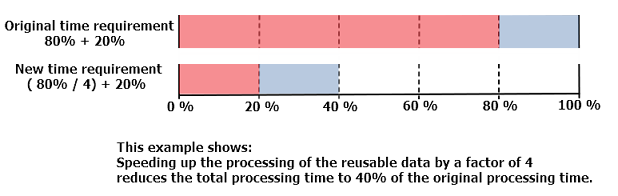

Consider, for example, an application that spends 80% of its processing time on reusable data and 20% on data that is not reusable. If the processing of reusable data can be sped up by a factor of 4, that 80% shrinks to 20% of the original time. Total processing time then falls to 40% of the original (20% + 20%), which is a 2.5 times speedup.

The example above is generalized in Amdahl's law, a formula for calculating how much faster a whole system becomes, relative to its original speed, when only part of the system is sped up:

Total speedup = 1 / ((1 - Proportion Sped Up) + (Proportion Sped Up / Proportional Speedup))

where:

"Total speedup" is a number that indicates how much faster a system is compared to the original system. For example, "2.5" means that the new system is 2.5 times faster than the original system.

"Proportion sped up" is the fraction of the total system being sped up, expressed as a value between 0 and 1. For example, if the part being sped up accounts for 80% of the original total system, the value is 0.8.

"Proportional Speedup" is a number that indicates how much faster the part being sped up is compared to the corresponding part of the original system. For example, "4" means that the part being sped up is 4 times faster than in the original system.

The following examples show how to apply Amdahl’s law to common situations. In the interests of simplicity, we assume:

A single server.

A system containing a single cacheable item which, once cached, gets 100% cache hits and never expires.

Persistent Object Relational Caching

A Hibernate Session.load() for a single object is about 1000 times faster from cache than from a database.

A typical Hibernate query will return a list of IDs from the database, and then attempt to load the objects matching the IDs. If Session.iterate() is used, Hibernate goes back to the database to load each object. Imagine a scenario where we execute a query against the database that returns a hundred IDs and then loads each one. Let us assume that executing the initial query to return the hundred IDs takes 20% of the time, and the round trip loading of the database objects takes the rest (80%). The round trip loading for each object includes an additional database query part to access an object on the basis of the ID returned in the initial query. Assuming that the additional database query takes 75% of the time that the round trip loading takes, the proportion being sped up is thus 60% (calculated from 75% * 80%).

The expected total system speedup is thus:

1 / ((1 - .6) + .6 / 1000)

= 1 / (.4 + .0006)

= 2.5 times system speedup
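The calculation above is easy to check in code. The following is a minimal sketch, not part of Ehcache or Hibernate: the class name `AmdahlLaw` and the method `totalSpeedup` are hypothetical names chosen for illustration, and the inputs are the assumed figures from this example (80% of the time in round-trip loading, 75% of that in the per-object query, and a 1000 times faster load from cache).

```java
/** Illustrative helper for Amdahl's law; names are hypothetical. */
final class AmdahlLaw {

    /**
     * @param proportionSpedUp    fraction of the original run time that is sped up (0 to 1)
     * @param proportionalSpeedup how many times faster that part becomes (greater than 0)
     * @return how many times faster the whole system becomes
     */
    static double totalSpeedup(double proportionSpedUp, double proportionalSpeedup) {
        if (proportionSpedUp < 0.0 || proportionSpedUp > 1.0) {
            throw new IllegalArgumentException("proportionSpedUp must be between 0 and 1");
        }
        if (proportionalSpeedup <= 0.0) {
            throw new IllegalArgumentException("proportionalSpeedup must be positive");
        }
        return 1.0 / ((1.0 - proportionSpedUp) + proportionSpedUp / proportionalSpeedup);
    }

    public static void main(String[] args) {
        // Assumed breakdown from the example: 75% of the 80% spent on round-trip loading.
        double proportionSpedUp = 0.80 * 0.75;
        double speedupFromCache = 1000.0;
        System.out.printf("Proportion sped up: %.2f%n", proportionSpedUp);
        System.out.printf("Total speedup: %.2f%n", totalSpeedup(proportionSpedUp, speedupFromCache));
    }
}
```

The same method can be reused for the other examples in this section by changing the two arguments.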

Web Page Caching

An observed speed up from caching a web page is 1000 times. Ehcache can retrieve a page from its SimplePageCachingFilter in a few milliseconds.

Because the whole web page is the result of a computation that the cache replaces, the proportion sped up is 100%.

The expected system speedup is thus:

1 / ((1 - 1) + 1 / 1000)

= 1 / (0 + .001)

= 1000 times system speedup

Web Page Fragment Caching

Caching the entire page is a big win. Sometimes the liveliness requirements vary in different parts of the page. Here the SimplePageFragmentCachingFilter can be used.

Let's say we have a 1000-fold improvement on a page fragment that takes 40% of the page render time.

The expected system speedup is thus:

1 / ((1 - .4) + .4 / 1000)

= 1 / (.6 + .0004)

= 1.67 times system speedup

Cache Efficiency

Cache entries do not live forever. Some examples that come close are:

Static web pages or web page fragments, like page footers.

Database reference data, such as the currencies in the world.

Factors that affect the efficiency of a cache are:

Liveliness: How live the data needs to be. High liveliness means that the data can change frequently, so the value in cache will soon be out of date; in this scenario the caching overhead can reduce the performance of an application using the cache. Low liveliness means that the data changes only rarely, so the value in cache will often match the current real value of the non-cached data. In general, the less live an item of data is, the more suitable it is for caching.

Proportion of data cached: What proportion of the data can fit into the resource limits of the machine. Garbage collection issues can make it impractical to have a large Java heap.

Shape of the usage distribution: If only 300 out of 3000 entries can be cached, but a Pareto (80/20 rule) distribution applies, it might be that those 300 entries are the ones requested 80% of the time. This drives up the average request lifespan.

Read/Write ratio: The proportion of times data is read compared with how often it is written. Things such as the number of empty rooms in a hotel change often and are written to frequently. However, the details of a room, such as the number of beds, are effectively immutable, so a single write might be followed by thousands of reads.
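The effect of the usage distribution can be estimated with a short calculation. The sketch below rests on two assumptions that the text does not make: popularity is Zipf-like (the entry at rank r is requested with weight 1/r), and the cache always holds the most popular entries. The class and method names are hypothetical.

```java
/** Illustrative estimate of hit ratio under skewed usage; names are hypothetical. */
final class UsageDistributionSketch {

    /**
     * Expected hit ratio when the cache holds the {@code cached} most popular
     * of {@code total} entries, assuming weight(rank) = 1 / rank.
     */
    static double expectedHitRatio(int cached, int total) {
        if (total <= 0 || cached < 0 || cached > total) {
            throw new IllegalArgumentException("require total > 0 and 0 <= cached <= total");
        }
        double cachedWeight = 0.0;
        double totalWeight = 0.0;
        for (int rank = 1; rank <= total; rank++) {
            double weight = 1.0 / rank; // assumed Zipf-like popularity
            totalWeight += weight;
            if (rank <= cached) {
                cachedWeight += weight;
            }
        }
        return cachedWeight / totalWeight;
    }

    public static void main(String[] args) {
        // 300 of 3000 entries cached, as in the usage distribution example.
        System.out.printf("Skewed usage:  %.3f%n", expectedHitRatio(300, 3000));
        // Uniform usage for comparison: every entry is equally likely to be requested.
        System.out.printf("Uniform usage: %.3f%n", 300.0 / 3000.0);
    }
}
```

With these assumed weights, the 300 cached entries account for roughly 73% of requests, against 10% if usage were uniform. A real workload may be more or less skewed than this.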

Cluster Efficiency

Assume a round-robin load balancer where each request goes to the next server. The cache has one entry whose lifespan, measured in requests, is variable, for example because of a time to live (TTL) setting. The following table shows how that lifespan can affect hits (H) and misses (M).

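| Server 1 | Server 2 | Server 3 | Server 4 |
|----------|----------|----------|----------|
| M        | M        | M        | M        |
| H        | H        | H        | H        |
| H        | H        | H        | H        |
| H        | H        | H        | H        |
| H        | H        | H        | H        |
| ...      | ...      | ...      | ...      |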

The cache hit ratios for the system as a whole are as follows:

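| Entry lifespan (requests) | Hit ratio, 1 server | Hit ratio, 2 servers | Hit ratio, 3 servers | Hit ratio, 4 servers |
|---------------------------|---------------------|----------------------|----------------------|----------------------|
| 2                         | 1/2                 | 0/2                  | 0/2                  | 0/2                  |
| 4                         | 3/4                 | 2/4                  | 1/4                  | 0/4                  |
| 10                        | 9/10                | 8/10                 | 7/10                 | 6/10                 |
| 20                        | 19/20               | 18/20                | 17/20                | 16/20                |
| 50                        | 49/50               | 48/50                | 47/50                | 46/50                |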

The efficiency of a cluster of standalone caches is generally:

(Lifespan in requests - Number of Standalone Caches) / Lifespan in requests

Where the lifespan is large relative to the number of standalone caches, cache efficiency is not much affected. However when the lifespan is short, cache efficiency is dramatically affected.
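The hit ratios and the efficiency formula can be reproduced with a small simulation. This is a sketch under stated assumptions: the entry expires on every server at the same moment, the lifespan is counted in requests across the whole cluster, and requests are dealt out strictly round-robin. The class and method names are hypothetical.

```java
/** Illustrative round-robin simulation of standalone caches; names are hypothetical. */
final class ClusterEfficiencySketch {

    /** Closed form from the text, floored at zero when every request is a miss. */
    static double clusterEfficiency(int lifespanInRequests, int standaloneCaches) {
        if (lifespanInRequests <= 0 || standaloneCaches <= 0) {
            throw new IllegalArgumentException("both arguments must be positive");
        }
        return Math.max(0, lifespanInRequests - standaloneCaches) / (double) lifespanInRequests;
    }

    /** Counts cache hits during one entry lifespan under round-robin load balancing. */
    static int simulateHits(int lifespanInRequests, int standaloneCaches) {
        boolean[] loaded = new boolean[standaloneCaches]; // has this server cached the entry yet?
        int hits = 0;
        for (int request = 0; request < lifespanInRequests; request++) {
            int server = request % standaloneCaches; // round-robin: next server each time
            if (loaded[server]) {
                hits++;
            } else {
                loaded[server] = true; // miss: the server loads the entry into its own cache
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        int[] lifespans = {2, 4, 10, 20, 50};
        for (int lifespan : lifespans) {
            StringBuilder row = new StringBuilder("lifespan " + lifespan + ":");
            for (int servers = 1; servers <= 4; servers++) {
                row.append(' ').append(simulateHits(lifespan, servers)).append('/').append(lifespan);
            }
            System.out.println(row);
        }
    }
}
```

Each server pays one miss per lifespan, which is why the number of standalone caches is subtracted from the lifespan in the formula.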

A Cache Version of Amdahl's Law

Applying Amdahl's law to caching, we now have:

Total speedup = 1 / ((1 - Proportion Sped Up * effective cache efficiency) + (Proportion Sped Up * effective cache efficiency) / Proportional Speedup)

where:

effective cache efficiency = (cache efficiency) * (cluster efficiency)
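The cache version of the formula can be expressed as a small helper. This is a minimal sketch with hypothetical names (`CacheAmdahlLaw`, `effectiveCacheEfficiency`, `totalSpeedup`). The inputs in `main` are illustrative values chosen for this sketch, not figures from the text.

```java
/** Illustrative helper for the cache version of Amdahl's law; names are hypothetical. */
final class CacheAmdahlLaw {

    /** Combines per-cache efficiency with cluster efficiency, both between 0 and 1. */
    static double effectiveCacheEfficiency(double cacheEfficiency, double clusterEfficiency) {
        return cacheEfficiency * clusterEfficiency;
    }

    /**
     * @param proportionSpedUp         fraction of the system that caching could speed up (0 to 1)
     * @param effectiveCacheEfficiency fraction of requests actually served from cache (0 to 1)
     * @param proportionalSpeedup      how many times faster a cache hit is than the original path
     */
    static double totalSpeedup(double proportionSpedUp,
                               double effectiveCacheEfficiency,
                               double proportionalSpeedup) {
        if (proportionalSpeedup <= 0.0) {
            throw new IllegalArgumentException("proportionalSpeedup must be positive");
        }
        // Only the part of the work that actually hits the cache is sped up.
        double cachedProportion = proportionSpedUp * effectiveCacheEfficiency;
        return 1.0 / ((1.0 - cachedProportion) + cachedProportion / proportionalSpeedup);
    }

    public static void main(String[] args) {
        // Illustrative inputs only: 50% cache efficiency, 75% cluster efficiency,
        // the whole request is cacheable, and a hit is 1000 times faster.
        double effective = effectiveCacheEfficiency(0.50, 0.75);
        System.out.printf("Effective cache efficiency: %.3f%n", effective);
        System.out.printf("Total speedup: %.2f%n", totalSpeedup(1.0, effective, 1000.0));
    }
}
```

When the effective cache efficiency is 1, this reduces to the original form of Amdahl's law; when it is 0, the speedup is 1, meaning no improvement.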

Web Page Example

Applying this formula to the earlier web page cache example, with a cache efficiency of 35%, an average entry lifespan of 10 requests, and two servers:

cache efficiency = .35

cluster efficiency = (10 - 2) / 10

= .8

effective cache efficiency = .35 * .8

= .28

system speedup:

1 / ((1 - 1 * .28) + 1 * .28 / 1000)

= 1 / (.72 + .00028)

= 1.39 times system speedup

If the cache efficiency is 70% (two servers):

cache efficiency = .70

cluster efficiency = (10 - 2) / 10

= .8

effective cache efficiency = .70 * .8

= .56

system speedup:

1 / ((1 - 1 * .56) + 1 * .56 / 1000)

= 1 / (.44 + .00056)

= 2.27 times system speedup

If the cache efficiency is 90% (two servers):

cache efficiency = .90

cluster efficiency = (10 - 2) / 10

= .8

effective cache efficiency = .90 * .8

= .72

system speedup:

1 / ((1 - 1 * .72) + 1 * .72 / 1000)

= 1 / (.28 + .00072)

= 3.56 times system speedup

The benefit is dramatic because Amdahl’s law is most sensitive to the proportion of the system that is sped up.