This document describes various aspects of Event Replicator for Adabas processing. It covers the following topics:

Cross-Checking Subscription Definitions and Actual Replication

Reducing the Risk of Event Replicator Server Replication Pool Overflows

During normal processing, the following phases of processing occur:

| Phase | Processing Description |

|---|---|

| Collection | In the Adabas nucleus, when an update occurs to a replicated file in an Adabas database, the compressed information related to the update is collected and stored in the Adabas nucleus replication pool. Once the update has been fully processed and committed by Adabas (the protection information is stored properly in the Work data set and the protection log), all of the updates in a transaction are queued to be sent to the Event Replicator Server. |

| Transfer | The compressed replication transaction in the Adabas replication pool is sent to the Event Replicator Server. |

| Input | The replication transaction is received by the Event Replicator Server and stored in the Event Replicator Server replication pool. At this point, the replication data exists in both the Adabas and Event Replicator Server replication pools. When the entire transaction is received, it is queued for the Assignment phase. |

| Assignment | The replication transaction is queued for processing by zero or more subscriptions. |

| Subscription | The replication transaction is processed by the subscriptions to which it has been assigned. During this phase, the compressed replication transaction is adjusted using the specifications in the subscription to create a decompressed replication transaction. It is also assigned to zero or more destinations by the subscription. |

| Output | The decompressed replication transaction

is processed by each destination to which it was assigned. Each destination is

processed in one of the following ways:

|

| Completion | On the Event Replicator Server, the Adabas nucleus from which the replication transaction originated is notified that replication for the transaction is completed and the transaction is removed from the Event Replicator Server replication pool. When the Adabas nucleus receives the completion notification from the Event Replicator Server, all data for the complete transaction is removed from the Adabas nucleus replication pool. |

Once the Event Replicator for Adabas in installed, its replication processing is driven by definitions you specify. These definitions are described in the following table in order of importance to replication (required definitions are listed first).

Note:

You can run Event Replicator for Adabas in verify (test) mode, by turning on verification in

the VERIFYMODE replication definition. This is useful if you want to test the

definitions you have specified before you start using Event Replicator for Adabas in production mode.

For more information, read Running in Verify

Mode.

| Definition Type | Defines | How many definitions are required? |

|---|---|---|

| destination | The destination of the replicated data.

Destination definitions can be created for Adabas, File,

webMethods EntireX, WebSphere MQ, and Null destinations.

To maintain destination definitions using DDKARTE statements of the Event Replicator Server startup job, read Destination Parameter. To maintain destination definitions using the Adabas Event Replicator Subsystem, read Maintaining Destination Definitions. |

Required.

At least one destination definition is required for data replication to occur. Create one definition for every Event Replicator for Adabas destination you intend to use. |

| subscription | A set of specifications to be applied to

the replication of the data. These include (but are not limited to):

Subscription definitions identify SFILE definitions and resend buffer definitions that should be used. At least one SFILE definition is required. To maintain subscription definitions using DDKARTE statements of the Event Replicator Server startup job, read SUBSCRIPTION Parameter. To maintain subscription definitions using the Adabas Event Replicator Subsystem, read Maintaining Subscription Definitions. |

Required.

At least one subscription definition is required for data replication to occur. |

| SFILE | An Adabas file to be replicated and the

replication processing that should occur for that file. SFILE definitions are

sometimes referred to as subscription file definitions and are

referenced by subscription definitions.

An SFILE definition identifies (among other things):

To maintain SFILE definitions using DDKARTE statements of the Event Replicator Server startup job, read SUBSCRIPTION Parameter. To maintain SFILE definitions using the Adabas Event Replicator Subsystem, read Maintaining SFILE Definitions. |

Required.

At least one SFILE definition is required for data replication to occur. |

| initial-state | An initial-state request for data from

the target application. Initial-state definitions identify the subscription,

destination, and specific Adabas files to use in an Event Replicator for Adabas initial-state run.

To maintain initial-state definitions using DDKARTE statements of the Event Replicator Server startup job, read INITIALSTATE Parameter. To maintain initial-state definitions using the Adabas Event Replicator Subsystem, read Maintaining Initial-State Definitions. |

Not required.

If you want initial-state data produced in an Event Replicator for Adabas run, only one initial-state definition is required. Otherwise, no initial-state data definition is required. |

| IQUEUE | The input queue on which Event Replicator for Adabas should

listen for requests from webMethods EntireX and WebSphere MQ targets.

To maintain IQUEUE definitions using DDKARTE statements of the Event Replicator Server startup job, read IQUEUE Parameter. To maintain IQUEUE definitions using the Adabas Event Replicator Subsystem, read Maintaining Input Queue (IQUEUE) Definitions. |

Not required.

At least one IQUEUE definition is required for every EntireX Communicator or WebSphere MQ target you intend to use. If webMethods EntireX or WebSphere MQ are not used, no IQUEUE definition is required. |

| GFB |

A global format buffer (GFB) definition stored separately for use in SFILE definitions. You can specify GFBs manually or generate them using Predict file definitions. When you generate them, a field table is also generated. While a format buffer specification is required in a subscription's SFILE definition, a stored GFB definition does not need to be used. The SFILE definition could simply include the format buffer specifications it needs. To maintain GFB definitions using DDKARTE statements of the Event Replicator Server startup job, read GFORMAT Parameter. To maintain GFB definitions using the Adabas Event Replicator Subsystem, read Maintaining GFB Definitions. |

Not required.

No GFB definition is required. If a global format buffer is needed, at least one GFB definition is required. |

| resend buffer | A resend buffer that can be used by any

subscription to expedite the retransmission of a transaction.

To maintain resend buffer definitions using DDKARTE statements of the Event Replicator Server startup job, read RESENDBUFFER Parameter. To maintain resend buffer definitions using the Adabas Event Replicator Subsystem, read Maintaining Resend Buffer Definitions. |

Not required.

No resend buffer definition is required. If you elect to retransmit a transaction, at least one resend buffer definition is required. |

| transaction filter | A filter definition that can be used to

filter the records used for replication based on the values of fields in those

records.

To maintain transaction filter definitions using DDKARTE statements of the Event Replicator Server startup job, read FILTER Parameter. To maintain transaction filter definitions using the Adabas Event Replicator Subsystem, read Maintaining Transaction Filter Definitions. |

Not required.

No transaction filter definition is required. If you want to use a transaction filter to filter records used in replication, at least one transaction filter definition is required. |

During session start, the Adabas nucleus performs the following tasks:

Allocates memory needed by the replication-related code.

Determines which files need to be replicated.

Establishes contact with each Event Replicator target ID associated with files to be replicated.

Once the Adabas nucleus is started, the following replication processing occurs during the collection phase:

For each user, the Adabas nucleus tracks and places information in the Adabas replication pool related to modifications to each record in each file selected for replication. To track modifications, the Event Replicator for Adabas captures the before and after images (compressed) of all modified data.

The nucleus accumulates replication data for an entire user transaction, as follows:

A primary key may or may not be associated with a replicated file. If a primary key is not associated with a replicated file, any before image associated with a record is the before image from data storage. If a primary key is associated with a replicated file and the key is modified, any before image associated with a record is only the compressed value of the primary key. The primary key can be specified using the ADALOD LOAD RPLKEY parameter.

Data for replication is collected at the time protection records are created for modifications to records within a replicated file. The following data is collected during a transaction that modifies records on one or more replicated files:

Data storage after image.

Data storage before image during a delete.

Data storage before image during an update (optionally, if this feature is activated for the file).

For further information, see the ADALOD LOAD RPLDSBI parameter documentation and the ADADBS REPLICATION DSBI parameter documentation. Discussions of Adabas utility functions specific to Event Replicator for Adabas can be found in Utilities Used with Replication.

Primary key before image during an update or delete, when the optional primary key has been defined for the file and the key has been updated or deleted from the record.

If a transaction is backed out, the nucleus discards all replication data collected for the transaction. No replication-related information is collected during an update command when no data is modified during the update command.

If any record is modified more than once during a transaction, the nucleus makes available to the outside destination only the final instance of the modification of the record. This is done by consolidating modifications to the same record within the same user transaction, as follows:

For the sake of performance, no data consolidation occurs at the point the modification related data is collected.

Data consolidation occurs in the nucleus address space.

Data consolidation occurs after a transaction is committed on WORK.

The following rules apply to the consolidation of modifications to the same record during one transaction:

An insert followed by an update is treated as an insert.

An update followed by another update is treated as one update.

An update followed by a delete is treated as one delete.

When a before image exists for a primary key and a before image exists from data storage, the before image for the primary key is used.

The first before image captured is used.

Note:

This rule is subject to the above rule regarding a

before image of a primary key versus the before image from data storage.

The last after image captured is used.

There is an exception to these data consolidation rules: a delete followed by an insert to the same record will be treated as two separate modifications.

Important:

The setting of the RPLSORT parameter can affect how

modifications are consolidated. When RPLSORT=YES is specified, modifications

are consolidated as described in this section. When RPLSORT=NO is specified,

modifications are still consolidated, but their referential integrity is

maintained. In other words, the chronological order of the updates is

maintained for each ISN in a file. For more information, read

RPLSORT

Parameter.

The nucleus notifies the Event Replicator Server when a transaction with replicated data has been committed.

The nucleus makes the replication data available to the Event Replicator Server.

When the Adabas nucleus receives the completion notification from the Event Replicator Server during the completion phase, all data for the complete transaction is removed from the Adabas nucleus replication pool.

Notes:

For information on the Adabas nucleus settings, see Adabas Initialization (ADARUN) Parameters and Utilities Used with Replication .

The following description summarizes the processing performed by the Event Replicator Server:

During Event Replicator Server startup, the Event Replicator Server establishes contact with Adabas nuclei for the related database IDs. If an Adabas nucleus is not yet active, the nucleus contacts the Event Replicator Server during nucleus initialization.

During the input, assignment, and subscription phases, the Event Replicator Server processes the received modified data according to the subscriptions defined in the replication definition parameters.

After processing the data, the Event Replicator Server may apply user-customizable logic to the replication process (for example, filtering, conversion, or transformation).

During the output phase, the Event Replicator Server delivers the replicated data to the messaging system destination for replication to the target application.

Each replicated transaction delivered to a target is assigned a unique sequence number. This sequence number is generated for each unique subscription-destination combination of the replicated transaction. In other words, if the same replicated transaction is delivered to two different destinations by a subscription, that transaction may have two different sequence numbers (one for each destination).

Initial-state requests may be needed to resolve an ambiguous state incurred by the target application; the request can contain requests for a single record, a set of records, or an entire file.

During the completion phase, the Event Replicator Server notifies the appropriate Adabas nucleus that transaction replication is completed and then removes all information about the completed transaction from the Event Replicator Server replication pool.

An Event Replicator Server may process data from multiple databases. Replication data for one Adabas file must be processed by a single Event Replicator Server. No two Event Replicator Servers handle the same set of files from the same database.

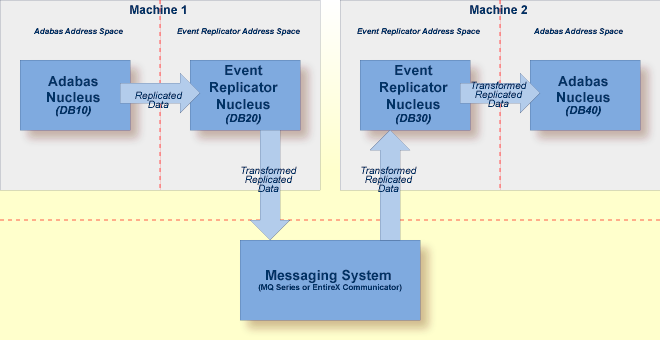

Event Replicator for Adabas provides node-to-node support, in which one Event Replicator Server can send replicated data to a second Event Replicator Server. The second Event Replicator Server can then, in turn, send the replicated data onto other destinations.

Consider the example depicted in the following picture:

The following processing occurs in this example:

Source Adabas database 10 sends its data to Event Replicator Server 20.

Event Replicator Server 20 sends the replicated data to an webMethods EntireX or WebSphere MQ destination.

Event Replicator Server 30 reads the replicated data from the webMethods EntireX or WebSphere MQ input queue.

Event Replicator Server 30 processes the replicated and sends it to Adabas database 40.

The following definitions must exist for this processing to occur correctly:

The definitions for Event Replicator Server 20 must include one or more subscriptions for Adabas database 10, which send the replicated data to a webMethods EntireX Broker or WebSphere MQ destination. The subscriptions may optionally also transform the replicated data.

The definitions for Event Replicator Server 30 must include webMethods EntireX or WebSphere MQ input queue definitions that match the webMethods EntireX or WebSphere MQ destination definitions in Event Replicator Server 20. The IQBUFLEN parameter value (input queue buffer length), specified in the input queue definitions for Event Replicator Server 30, must be greater than or equal to the length of the largest message sent by Event Replicator Server 20. The largest message sent by Event Replicator Server 20 is limited by the minimum of the following Event Replicator Server 20 settings: MAXOUTPUTSIZE parameter, DMAXOUTPUTSIZE parameter (if specified for the destination), or the message limit imposed by the messaging system.

Parameters IRMSGINTERVAL and IRMSGLIMIT control the number of input queue related messages printed. Consider the values set for these two parameters in Event Replicator Server 30.

The Event Replicator Server 30 definitions must also include at least one subscription for any data received from Adabas database 10 (in this case via the input queue). This subscription must send the replicated data to an Adabas destination definition for Adabas database 40.

The Event Replicator Server 30 definitions must also specify that, at startup,

Event Replicator Server 30 will not automatically initiate a connection with Adabas

database 10. In other words, the

DBCONNECT

parameter for database 10 must be set to

"NO" in Event Replicator Server 30.

ADARPD SUBSCRIPTION NAME=SRCEEMPL ... ADARPD SDESTINATION='OUTPUT1' ADARPD SFILE=4,SFDBID=610 ... ADARPD DESTINATION NAME='OUTPUT1' ADARPD DTYPE=ETBROKER ADARPD DETBBROKERID='DAEF:6020:TCP' ADARPD DETBSERVICECLASS=REPTOR ADARPD DETBSERVICENAME=FILE004 ADARPD DETBSERVICE=REPLICATE |

DEFAULTS = SERVICE CONV-LIMIT = UNLIM CONV-NONACT = 4M NOTIFY-EOC = YES SERVER-NONACT = 5M SHORT-BUFFER-LIMIT = UNLIM TRANSLATION = NO DEFEERED = YES CLASS = REPTOR, SERVER = *, SERVICE = * |

ADARPD SUBSCRIPTION NAME=SRCEEMPL ... ADARPD SDESTINATION='DADA1' ADARPD SFILE=4,SFDBID=610 ... ADARPD DESTINATION NAME=DADA1 ADARPD DTYPE=ADABAS ADARPD DAIFILE=4,DAIDBID=610,DATFILE=4,DATDBID=508 ADARPD IQUEUE NAME=INPUT1 ADARPD IQTYPE=ETBROKER ADARPD IQETBBROKERID='DAEF:6020:TCP' ADARPD IQETBSERVICECLASS=REPTOR ADARPD IQETBSERVICENAME=FILE004 ADARPD IQETBSERVICE=REPLICATE |

Normally, only changed data is replicated. However, this presumes that the target system has the same data that the source had before the change. If this is not the case, you need to get an initial version of the data to the target. This is accomplished using an initial-state request.

Initial-state requests must be supported by initial-state definitions. Each request must specify the name of the initial-state definition that should be used, as well as the database ID and file number to be processed. Initial-state definitions are specified in the Adabas Event Replicator Subsystem or by INITIALSTATE parameters in the Event Replicator Server startup job.

Initial-state requests are initiated either from the target application (client) to the Event Replicator Server or using the Adabas Event Replicator Subsystem. For information on how to submit an initial-state request from the target application to the Event Replicator Server, read Event Replicator Client Requests. For information on how to submit an initial-state request from the Adabas Event Replicator Subsystem, read Populating a Database With Initial-State Data.

Initial-state data can contain any subset of the data on the Adabas database, based on the specifications in the initial-state definition and parameters supplied in the initial-state request. Records can be selected for initial-state processing in one of the following manners:

The complete file can be selected.

Records are selected from the file based on an ISN list.

Records are selected from the file based on specified selection criteria.

Note:

Each replicated initial-state record contains the related data

storage after image. No before image is replicated for an initial-state record.

During initial-state processing, the nucleus reads the selected records and passes them to the Event Replicator Server. The Event Replicator decompresses the records depending on the subscription format and sends the data to the assigned output destinations.

For more information, read about maintaining initial-state definitions using Adabas Event Replicator Subsystem. For information about the DDKARTE statements required for initial-state definitions, read about the INITIALSTATE parameter .

Clients can send specific requests for data to the Event Replicator Server by sending messages to an Event Replicator input queue. The following requests can be made:

Status inquiries

Initial-state data requests

Prior-transaction (resend buffer) data requests.

For more information about submitting these requests to the Event Replicator Server from the target application, read Event Replicator Client Requests.

The Adabas C5 command can be used to message Event Replicator Server destinations from your application. C5 commands are transmitted via the messaging system. For more information, read C5 Command: Write User Data to Protection Log.

You can request that some utility functions performed against an Adabas database be replicated to another Adabas database. For example, if you change the field length of a field in an Adabas file, that change can be replicated to the target Adabas database. This eliminates the need for you to manually intervene to make the change in the target and eliminates the resulting errors if you do not.

| Warning: In order for this utility replication to work properly, you must ensure that your source and target files are maintained in identical manners. If a utility function is performed against the source file and replicated to a target file that cannot accommodate the utility request, errors will result and replication to the target will fail. |

This section covers the following topics:

The following limitations exist in utility replication:

Utility functions cannot be replicated from an Adabas database to a target relational database (RDBMS) via the Event Replicator Target Adapter. If you need to refresh or drop tables in your RDBMS based on utility function activity performed against your Adabas database, you must use specific Adabas Event Replicator Subsystem functions once the Adabas utility has completed processing. For more information, read Submitting Event Replicator Target Adapter Requests (Adabas Event Replicator Subsystem).

Not all utility functions can currently be replicated. In addition, some functions can only be replicated if they are initiated from Adabas Online System (AOS). The functions that are currently replicated include:

Adding new fields. The ADADBS NEWFIELD batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Changing a field length. The ADADBS CHANGE batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Deleting a file. The ADADBS DELETE batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Refreshing (emptying) a file. The ADADBS REFRESH batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Renaming a file. The ADADBS RENAME batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Reusing ISNs. The ADADBS ISNREUSE batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Reusing data storage blocks. The ADADBS DSREUSE batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Modifying FCB parameters. The ADADBS MODFCB batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Releasing a descriptor. The ADADBS RELEASE batch utility function can be replicated as well as the equivalent AOS and AMA functions.

Defining a file. The Adabas Online System functions can be replicated.

Defining a new FDT. The Adabas Online System functions can be replicated.

Note:

Event Replicator for Adabas supports the replication of data associated with an

ADALOD LOAD or ADALOD UPDATE functions. For more information on this support,

read ADALOD LOAD

Parameters and

ADALOD UPDATE

Parameters.

For complete information on these Adabas utility functions, refer to your online Adabas utilities or Adabas Online System documentation.

Password protection on the target file or database is not supported

Expanded files are not supported. Any segmentation of a file is hidden to the target. So, file deletion functions operate on the logical file.

The functions defining a new FDT, adding new fields, or defining a file, are replicated to all Event Replicator Servers known to the database nucleus. This is because the Event Replicator Server ID is not yet defined for the file (ADADBS REPLICATION function), so all Event Replicator Servers are informed. The functions are only replicated if the Event Replicator Server includes the file in a subscription with appropriate destination settings.

Replicating Adabas utility functions is controlled by the destination definitions associated with your subscriptions. Using the DREPLICATEUTI parameter in a destination definition, you can control whether utility functions are replicated for that destination. In addition, for Adabas destinations, you can use the DAREPLICATEUTI parameter to control whether utility functions are replicated to a specific target database and file.

For more information about the DREPLICATEUTI and DAREPLICATEUTI parameters in destination definitions, read about the Destination Parameters. For information on using the Adabas Event Replicator Subsystem to maintain destination definitions, read Maintaining Destination Definitions Using the Adabas Event Replicator Subsystem.

Suppose you have an Adabas database with a database ID of 241. In addition, suppose Event Replicator Server 65535 destination and subscription definitions look like this:

ADARPD DATABASE ID=240,DBCONNECT=NO * ADARPD DESTINATION NAME=ADA240 ADARPD DREPLICATEUTI=YES ADARPD DTYPE=ADABAS ADARPD DAIFILE=151,DAIDBID=241,DATDBID=240,DATFILE=51 ADARPD DAREPLICATEUTI=YES * ADARPD SUBSCRIPTION NAME=TICKER ADARPD SDESTINATION='ADA240' ADARPD SFILE=151,SFDBID=241,SFBAI='AA-ZZ.' *

The TICKER subscription on Event Replicator Server 65535 is set up to replicate data from file 151 on database 241 using destination ADA240. However, suppose neither database file 151 or file 51 are loaded in their respective databases. The following processing can occur:

Define the new FDT and file 151 using Adabas Online System on database 241.

Since database 241 runs with REPLICATION=YES, the define operations for file 151 are sent to Event Replicator Server 65535.

The TICKER subscription sends changes from file 151 to destination ADA240 (database 240). Because destination ADA240 has DREPLICATEUTI=YES, it replicates the utility functions it receives in general. In addition, because DAREPLICATEUTI=YES for the target database 240, file 51, the define utility function is replicated to that specific target as file 51. In other words, new file 51 is defined using the definition specifications for file 151.

| Warning: If a file 51 already exists on database 240, this definition will fail and so will replication to this target. Take care when specifying target specifications for such operations. |

You can now activate replication for file 151 (ADADBS REPLICATION FILE=151,ON,TARGET=65535). This will allow user transactions and utility functions from file 151 on database 241 to be replicated to Event Replicator Server 65535, which will, in turn, replicate them to file 51 on database 240.

Event Replicator for Adabas and the utility ADARIS are able to support replication of source and target files protected with Adabas password or cipher code in conjunction with Adabas SAF Security Version 8.2.2 (and above) and RACF. This includes the replication of encrypted data. Note that it is important that AX822007 has been applied.

The source or target nuclei do not need to have Adabas SAF enabled in their address space or load library. However, you need to create the RACF PROFILE for the files since they use the INSTDATA fields of the profiles as a secured repository for the Cipher and Passwords for the file.

Only the Event Replicator Server itself needs ADASAF enabled and this is where the SAFCFG parameters are generated. The SAFCFG parameters tell the Event Replicator Server what security class to look for and the specific "File Profiles" that will be used. These contain the Cipher and passwords in the INSTDATA field.

The resources for these databases and/or files and the passwords and/or cipher codes need to be defined in RACF. Refer to the Passwords and Cipher Codes section in the Adabas SAF Security Operations documentation for information on how to define passwords and cipher codes to RACF. After a successful installation, the Event Replicator Server nucleus should start with Adabas SAF Security activated.

During the replication process, the Event Replicator Server nucleus will automatically detect if a file is ciphered and will automatically invoke Adabas SAF security to lookup the cipher code from the RACF database. The Cipher Code must have been defined to RACF in the INSTDATA field for the appropriate file resource profile.

For example, if the ciphered file being replicated is file 30 on database 50011, then the following is an example of how to define the necessary file resource profile along with the corresponding cipher code:

REDEFINE resource-class (CMD50011.FIL00030) OWNER(resource-owner)DATA(‘C=cipher-code’)

If a source file is ciphered and the cipher code is not available for the source, the source file will be deactivated and depending on the settings also the subscription that contains the source file.

The Event Replicator Server includes functionality to check for inconsistencies between files specified in one or more subscriptions in the Event Replicator Server versus files replicated in Adabas. These inconsistencies may be caused by one of the following instances:

A file may have replication turned on but not be referenced in a subscription in the related Event Replicator Server

A file may be specified in a subscription in an Event Replicator Server and either not have replication turned on in Adabas or have replication turned on in Adabas with a different Event Replicator Server ID.

The cross-check function also displays a message listing files contained in an Adabas nucleus that are defined to the Event Replicator but which are currently inactive; replication for these files will not occur.

The Event Replicator Server replication cross-check function executes when an Adabas database first connects with the Event Replicator Server. It can also be invoked using the RPLCHECK operator command.

The subscription logging facility , also know as the SLOG facility, can be used to ensure that data replicated to specific destinations is not lost if problems occur on your destinations. In order for this to occur, the SLOG facility must be activated for those destinations. For complete information on using the SLOG facility, read Using the Subscription Logging Facility.

Notes:

To reduce the risk of an Event Replicator Server replication pool becoming full when a destination cannot handle the rate at which replication transactions are sent to it by the Event Replicator, you can now request that incoming compressed replication transactions be written to the SLOG system file, before they are queued to the assignment phase. This means that during the input phase, the compressed transactions are stored first in the Event Replicator replication pool, but then written to the SLOG system file, freeing up the space in the Adabas nucleus and Event Replicator Server replication pools.

For more information on using the SLOG system file in this manner, read Reducing the Risk of Replication Pool Overflows.

Event Replicator for Adabas transaction logging (TLOG) allows you to log transaction data and events occurring within the Event Replicator address space. This information can be used as an audit trail of data that has been processed by the Event Replicator Server and of state change events that occurred during Event Replicator Server operations. In addition, it can be used to assist in the diagnosis of problems when replication does not work as expected.

For complete information, read Using Transaction Logging.

If Adabas terminates abnormally and restarts, it and the Event Replicator Server are usually able to recover any lost replication data and to deliver the normal stream of replication data to the target application.

This section covers the following topics:

During normal processing, Adabas writes control information to its Work data set to keep track of which replicated transactions the Event Replicator Server has confirmed as successfully processed. If Adabas terminates abnormally and then performs the autorestart at the beginning of the next session, it uses the protection data and control information in the Work data set to rebuild the replication data that existed in its replication pool at the time of the failure.

After reconnecting to the Event Replicator Server, Adabas:

deletes rebuilt replication data that the Event Replicator Server successfully processed,

keeps rebuilt replication data that the Event Replicator Server fully received but has not yet successfully processed, and

resends rebuilt replication data that the Event Replicator Server has not yet fully received.

Adabas marks all replication data that it recovers from the Work data set as possible resends and sends it to the Event Replicator Server (because the Event Replicator Server did not indicate it already received the data). The target application may receive such replication data twice (the second time marked as possible resend) if the Event Replicator Server did not stay active throughout the Adabas outage, because, in this case, the next instance of the Event Replicator Server does not know which replication data the previous instance had already successfully processed.

If the Event Replicator Server has not been able to process the replication data sent by Adabas in a timely manner, it is possible that Adabas will overwrite replication-related protection data on the Work data set that has not yet been successfully processed by the Event Replicator Server. If, in such a situation, Adabas abends, it will not be able to rebuild all replication data that existed in its replication pool at the time of the failure. In this case, Adabas prints messages detailing which replicated files may be involved in the loss of replication data.

No replication data is lost in an Adabas failure if, prior to the failure, the Event Replicator Server processed the replication data in a timely manner so that no replication-related protection data that has not been successfully processed is overwritten on the Work data set. The amount of protection data that can be held on the Work data set before it is overwritten is determined by the ADARUN LP parameter setting of Adabas. Increasing the LP parameter setting provides for a greater safety margin against the overwrite of protection data related to unprocessed replication data.

No replication data is lost in an Adabas failure if the Event Replicator Server stayed active throughout the Adabas outage even though replication-related protection data that has not been successfully processed may have been overwritten on the Work data set. This is because the replication data that Adabas is unable to rebuild from the Work data set is still present in the Event Replicator replication pool. After reconnecting to the Event Replicator Server, Adabas prints messages indicating that although the replication-related protection data that has not been successfully processed was overwritten on the Work data set, no replication data was actually lost.

Furthermore, in the case of the possible loss of replication data, the Event Replicator Server issues a status message to the target application indicating this condition for every subscription-destination related to any affected file.

If, after the session autorestart, Adabas abends again before the Event Replicator Server has received and processed all of the rebuilt replication data, Adabas will in the following second session autorestart again rebuild the relevant replication data from the information on its Work data set and, if necessary, resend it to the Event Replicator Server. This is possible as long as the protection data related to the as yet unprocessed replication data has not been overwritten on the Work data set.

If the session autorestart after an Adabas abend consistently fails for a replication-related reason, it is possible to restart Adabas with REPLICATION=NO. This makes Adabas perform the session autorestart without any attempt to recover replication data. It is an emergency measure to get Adabas back up, but disables replication processing. The replication-related parameters of the files that used to be replicated must be defined again and the original files and their replicas must be brought back in sync.

In a cluster database, the cluster nucleus performing the recovery process makes the Work data sets of the other nuclei (logically) empty at the end of the recovery. Replication data originating from the other nuclei can no longer be rebuilt after successful recovery. To avoid the loss of this replication data when another failure occurs before the recovered replication data has been sent to and processed by the Event Replicator Server, the nucleus performing the recovery process writes all replication data originating from the other nuclei to its own Work data set. Then, even if the first nucleus fails before sending all recovered replication data to the Event Replicator Server, these replication data will be recovered again from where it was written in the first recovery process.

A cluster nucleus writing replication data to the Work data set during recovery incurs a greater risk of a Work data set overflow. If a Work data set overflow occurs in a cluster database and an existing peer nucleus also gets a Work data set overflow during the recovery process, use the following procedure to recover without the need to restore and regenerate the database:

Define (or use) a new cluster nucleus that has not been active at the time of the Work data set overflow. Define a large LP parameter for this new nucleus. An LP value equal to the sum of the LP values of all cluster nuclei that were active at the time of the first failure should be sufficient.

Start the new cluster nucleus with the large LP parameter to let it perform the recovery process. It will read the protection and replication data from the Work data sets of the failed peer nuclei and write new protection and replication data to its own Work data set. The latter one can be made as large as necessary to resolve the Work overflow condition.

This procedure of resolving a Work data set overflow applies to all cluster nuclei, with or without replication, but is especially important for cluster nuclei with replication, as they have a greater risk of Work overflow during recovery.

Event Replicator for Adabas can be used with DTP=RM databases that have transactions coordinated by Adabas Transaction Manager. Replication takes place near real-time in the same way as it does for DTP=NO databases.