This document covers the following topics:

Adabas can now use character sets other than those based on the Latin alphabet and can retrieve data that use these character sets in the correct collating sequence.

In most cases, an Asian text character cannot be encoded using a single byte. For example, Japanese with more than 10,000 characters in its set is encoded using two or more bytes per character. Because of the encoding required, these are called double-byte character sets (DBCS) or multiple-byte character sets (MBCS) as opposed to the single-byte character sets (SBCS) characteristic of most Western languages.

There has been a standardisation of these character sets by the Unicode consortium in the Unicode standard, - please refer to the Unicode homepage at http://www.unicode.org/ for further information. An example of a DBCS is UCS-2, which contains all characters of Unicode Version 1.1, and an example of an MBCS is UTF-8, which represents all characters of the current Unicode version in 1 to 4 characters.

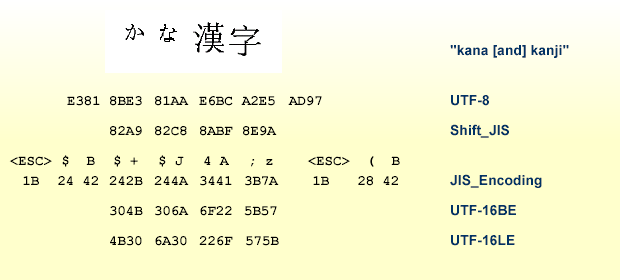

In the figure below, the Japanese kana (first two) and kanji (second two) characters are shown in a variety of encodings:

Notes:

Earlier versions of Adabas stored DBCS-encoded data in alphanumeric fields, but Adabas had no knowledge of the character sets. Applications had to know which character sets were used, and the applications themselves were responsible for conversions if they required the data in another character set. This, of course, is still possible with Adabas Version 5, but it now also accommodates DBCS and MBCS much more suitably by the introduction of the wide-character or Unicode (W) field format.

The new field format W has been created to handle double- and multiple-byte characters. The size of the W-format fields in bytes, like alphanumeric fields, is either a standard length defined in the FDT or a variable length. A W-format field can have the same field options as an alphanumeric field, except for the NV option.

A W-format field can be defined as a parent of a super-/subdescriptor; it cannot be defined as a parent of a hyperdescriptor or a phonetic descriptor.

If a superdescriptor contains wide-character (W) fields, the format of the superdescriptor is A.

All data for wide-character formatted fields is stored internally in UTF-8, but the Adabas user can specify the external encoding for a user session in the record buffer of the OP command or with the compression/decompression utilities ADACMP/ADADCU.

Notes:

If you have text fields, you usually do not want to order them according to their byte sequence; usually the required collation sequence is language dependent, for example, in Spanish "LL" is considered to be one character, unlike in other languages. Also, for the same language, many different collations are possible. For example, there may be different collations for phonebooks and for book indices. Uppercase/lowercase characters, hyphens in words (e.g., "coop" versus "co-op"), diacritic marks (e.g. umlauts, accent marks in such words as "résumé" versus "resume") may affect sequencing, or they may be ignored.

The sequencing rules for each collation are implemented in routines that generate a collation key for each text field value; Adabas uses ICU to do this.

A collation descriptor is a descriptor that is based on an ICU collating key for a Unicode field, where the ICU collating key is a binary string produced from the original character string by applying a Unicode Collation Algorithm and language-specific rules. When you perform a binary comparison between the collating keys produced this way for character strings, you perform a comparison between the strings that is appropriate to your locale.

Universal encoding support (UES) makes it possible for Adabas to process text data provided in any encoding supported by ICU, and to return text data in this encoding. With earlier versions of Adabas, it was only possible to convert data for Adabas buffers between different machine architectures (ASCII, EBCDIC) with one fixed translation table - it was not possible to convert between more than one ASCII and one EBCDIC derived character set.